Last updated: May 2023

2023

A Cookbook of Self-Supervised Learning

Randall Balestriero, Mark Ibrahim, Vlad Sobal*, Ari S Morcos*, Shashank Shekhar*, Tom Goldstein*, Florian Bordes*, Adrien Bardes*, Gregoire Mialon*, Yuandong Tian*, Avi Schwarzschild*, Andrew Gordon Wilson*, Jonas Geiping*, Quentin Garrido*, Pierre Fernandez*, Amir Bar*, Hamed Pirsiavash*, Yann LeCun*, and Micah Goldblum*

*equal contribution

arXiv, 2023.

arXiv Tweet

title={A Cookbook of Self-Supervised Learning},

author={Randall Balestriero and Mark Ibrahim and Vlad Sobal and Ari Morcos and Shashank Shekhar and Tom Goldstein and Florian Bordes and Adrien Bardes and Gregoire Mialon and Yuandong Tian and Avi Schwarzschild and Andrew Gordon Wilson and Jonas Geiping and Quentin Garrido and Pierre Fernandez and Amir Bar and Hamed Pirsiavash and Yann LeCun and Micah Goldblum},

year={2023},

eprint={2304.12210},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

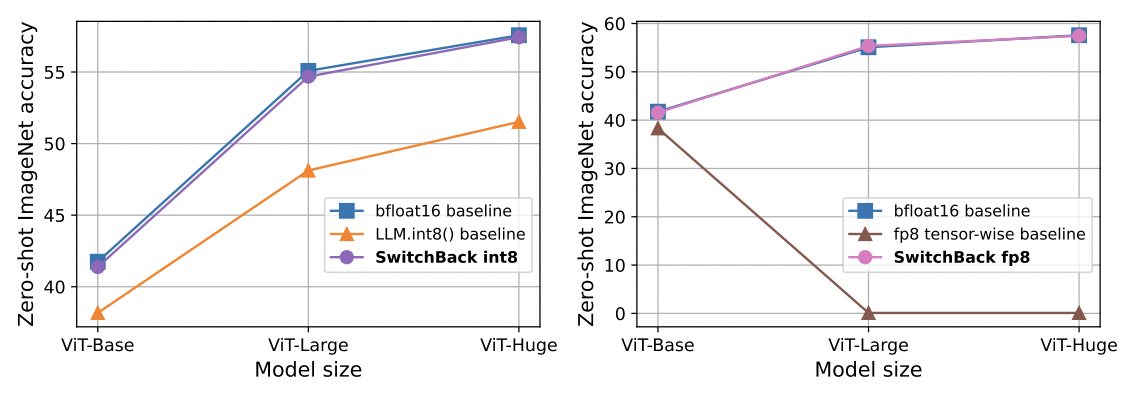

Stable and low-precision training for large-scale vision-language models

Mitchell Wortsman*, Tim Dettmers*, Luke Zettlemoyer, Ari S Morcos, Ali Farhadi, and Ludwig Schmidt

*equal contribution

arXiv, 2023.

PDF arXiv Tweet

title={Stable and low-precision training for large-scale vision-language models},

author={Mitchell Wortsman and Tim Dettmers and Luke Zettlemoyer and Ari Morcos and Ali Farhadi and Ludwig Schmidt},

year={2023},

eprint={2304.13013},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

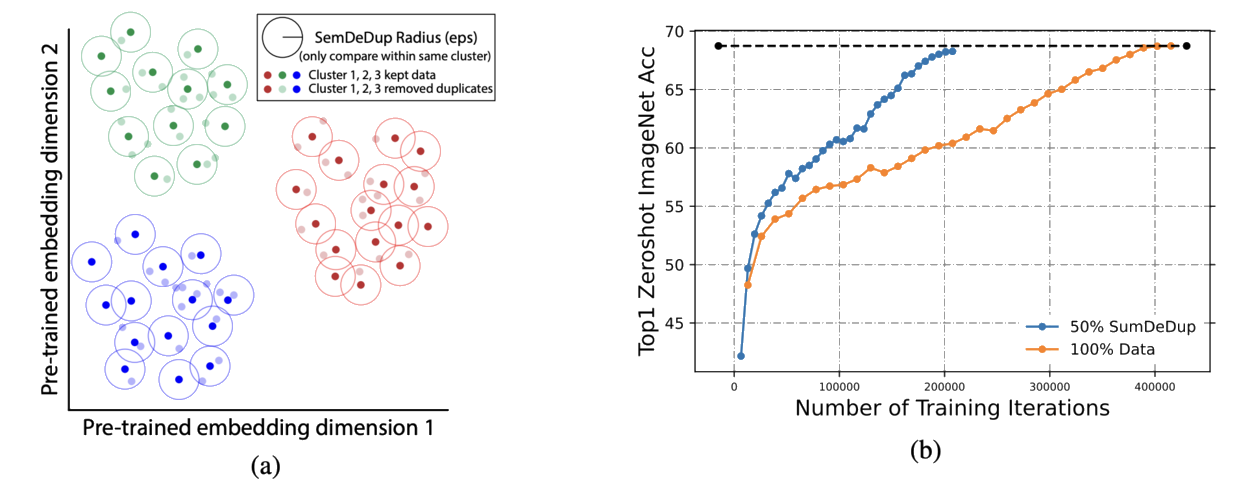

SemDeDup: Data-efficient learning at web-scale through semantic deduplication

Amro Abbas, Kushal Tirumala, Dániel Simig, Surya Ganguli, and Ari S Morcos

arXiv, 2023.

Also appeared in:

ICLR Workshop on Multimodal Representation Learning, 2023.

Workshop Best Paper Award

PDF arXiv Tweet

title={SemDeDup: Data-efficient learning at web-scale through semantic deduplication},

author={Amro Abbas and Kushal Tirumala and Dániel Simig and Surya Ganguli and Ari S. Morcos},

year={2023},

eprint={2303.09540},

primaryClass={cs.LG}

}

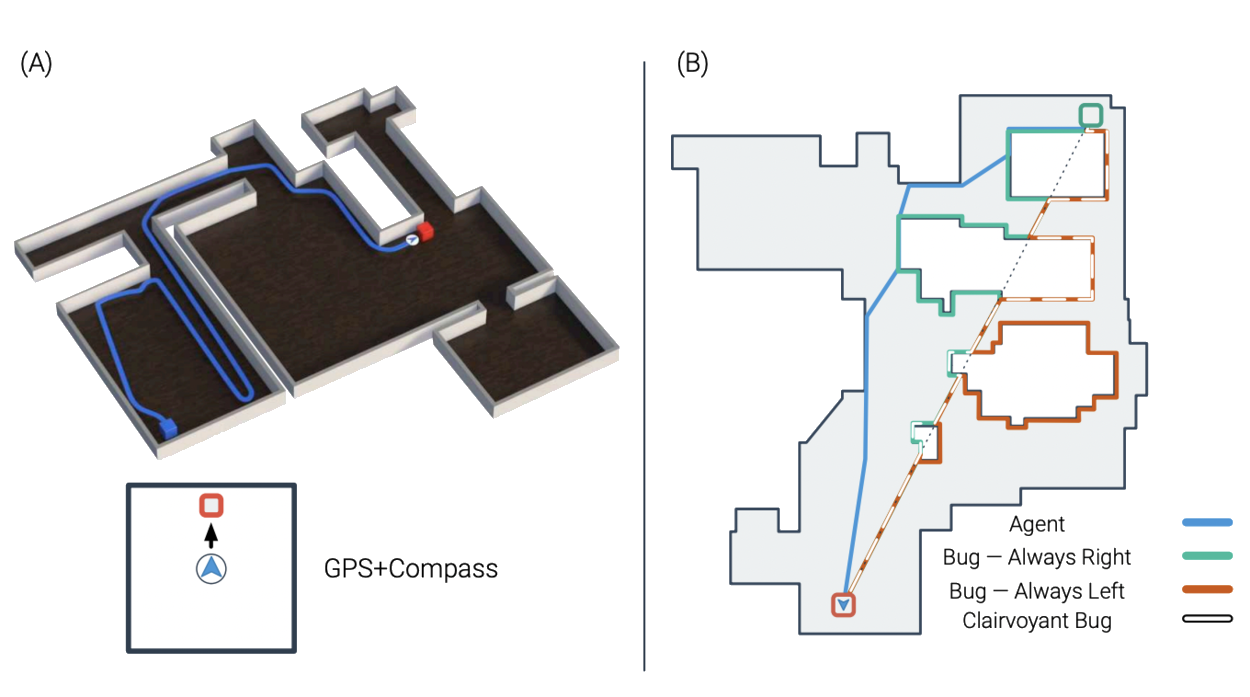

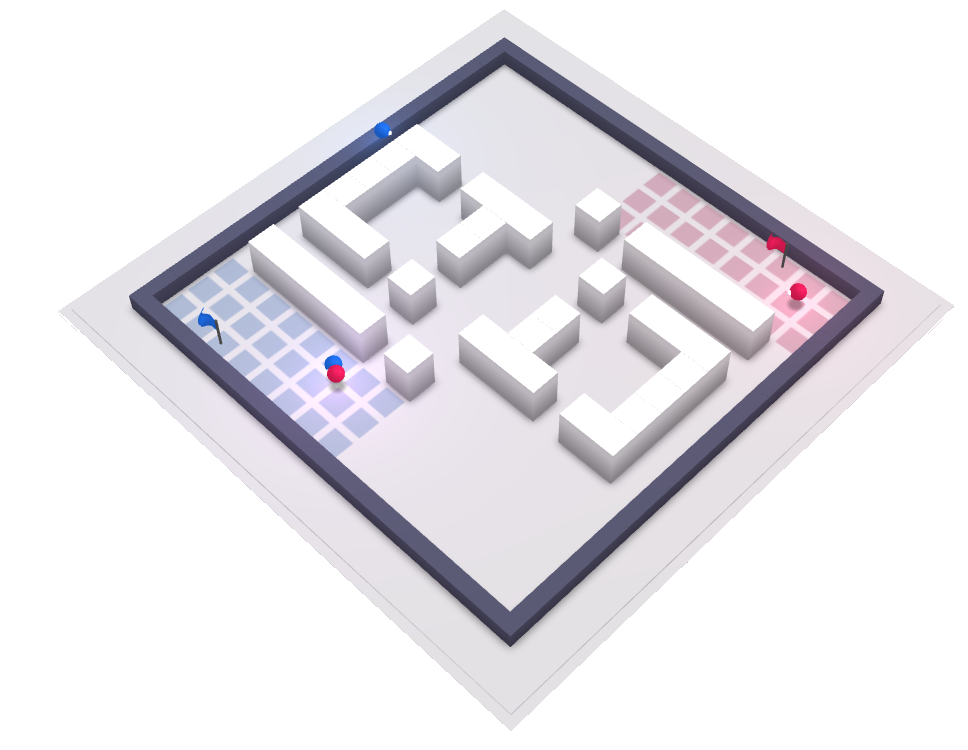

Emergence of Maps in the Memories of Blind Navigation Agents

Erik Wijmans, Manolis Savva, Irfan Essa, Stefan Lee, Ari S Morcos, and Dhruv Batra

International Conference on Learning Representations Oral (Notable, Top 5%), 2023.

Outstanding Paper Award

PDF arXiv Tweet ICLR Webpage

wijmans2023emergence,

title={Emergence of Maps in the Memories of Blind Navigation Agents},

author={Erik Wijmans and Manolis Savva and Irfan Essa and Stefan Lee and Ari S. Morcos and Dhruv Batra},

booktitle={International Conference on Learning Representations},

year={2023},

url={https://openreview.net/forum?id=lTt4KjHSsyl}

}

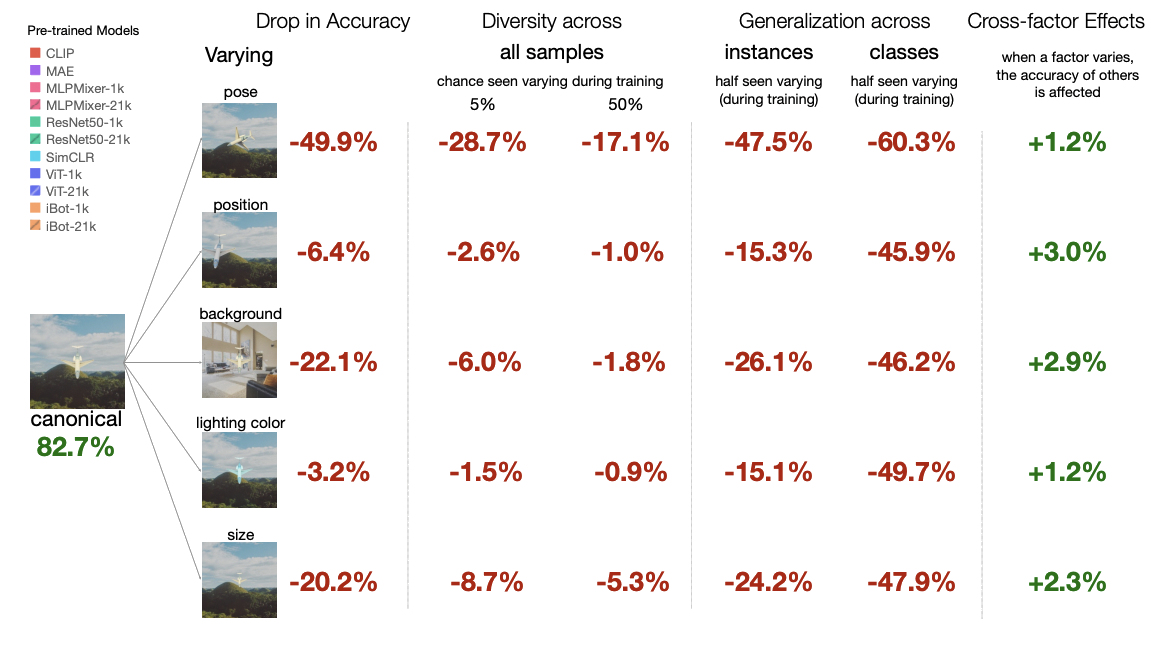

The Robustness Limits of SoTA Vision Models to Natural Variation

Mark Ibrahim, Quentin Garrido, Ari S Morcos, and Diane Bouchacourt

Transactions on Machine Learning Research, 2023.

PDF arXiv TMLR

url = {https://openreview.net/forum?id=QhHLwn3D0Y},

author = {Ibrahim, Mark and Garrido, Quentin and Morcos, Ari and Bouchacourt, Diane},

title = {The Robustness Limits of SoTA Vision Models to Natural Variation},

publisher = {Transactions on Machine Learning Research},

year = {2023},

}

2022

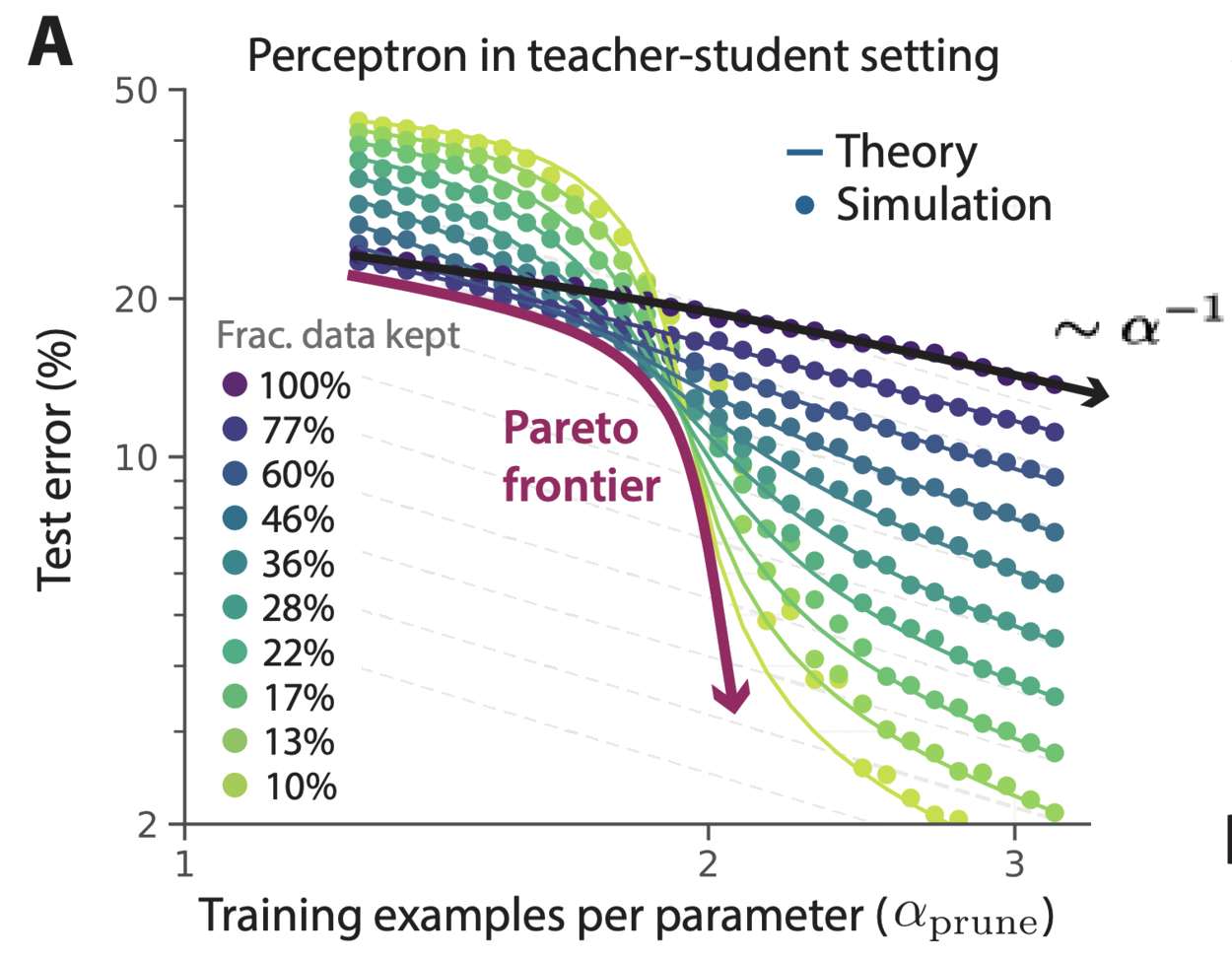

Beyond neural scaling laws: beating power law scaling via data pruning

Ben Sorscher*, Robert Geirhos*, Shashank Shekhar, Surya Ganguli§, and Ari S Morcos§

*joint first authors §joint senior authors

Neural Information Processing Systems (NeurIPS) Oral, 2022.

Outstanding Paper Award

PDF arXiv NeurIPS Tweet Poster

sorscher2022beyond,

title={Beyond neural scaling laws: beating power law scaling via data pruning},

author={Ben Sorscher and Robert Geirhos and Shashank Shekhar and Surya Ganguli and Ari S. Morcos},

booktitle={Advances in Neural Information Processing Systems},

editor={Alice H. Oh and Alekh Agarwal and Danielle Belgrave and Kyunghyun Cho},

year={2022},

url={https://openreview.net/forum?id=UmvSlP-PyV}

}

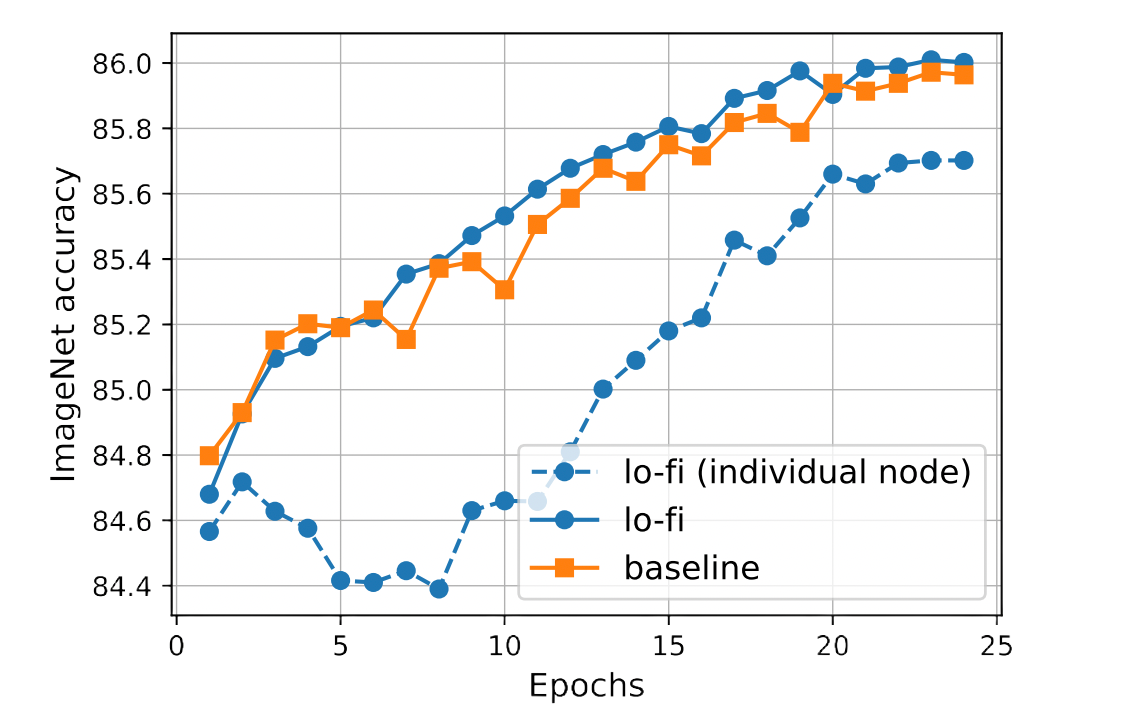

lo-fi: distributed fine-tuning without communication

Mitchell Wortsman, Suchin Gururangan, Shen Li, Ali Farhadi, Ludwig Schmidt, Michael Rabbat, and Ari S Morcos

Transactions on Machine Learning Research, 2022.

PDF arXiv Tweet

wortsman2022lofi,

title={lo-fi: distributed fine-tuning without communication},

author={Mitchell Wortsman and Suchin Gururangan and Shen Li and Ali Farhadi and Ludwig Schmidt and Michael Rabbat and Ari S. Morcos},

journal={Transactions on Machine Learning Research},

issn={2835-8856},

year={2022},

url={https://openreview.net/forum?id=1U0aPkBVz0},

}

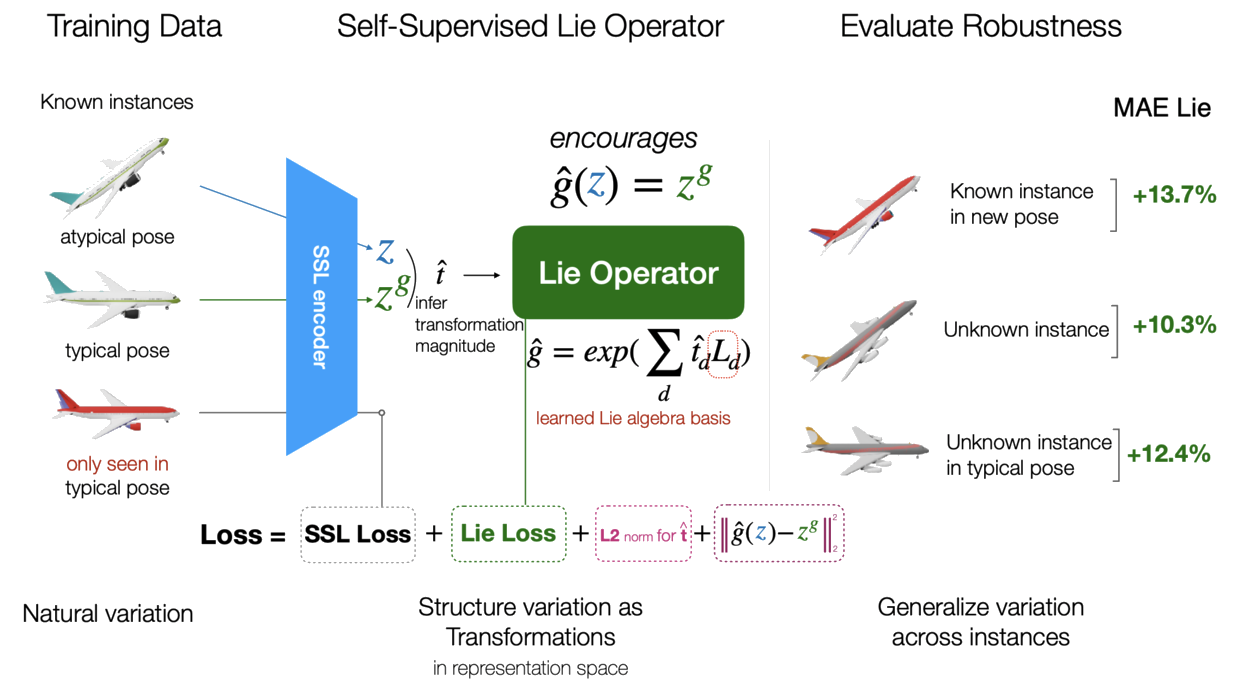

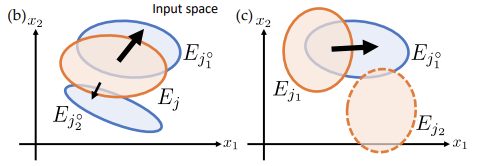

Robust Self-Supervised Learning with Lie Groups

Mark Ibrahim*, Diane Bouchacourt*, and Ari S Morcos

*equal contribution

arXiv, 2022.

PDF arXiv

doi = {10.48550/ARXIV.2210.13356},

url = {https://arxiv.org/abs/2210.13356},

author = {Ibrahim, Mark and Bouchacourt, Diane and Morcos, Ari},

title = {Robust Self-Supervised Learning with Lie Groups},

publisher = {arXiv},

year = {2022},

}

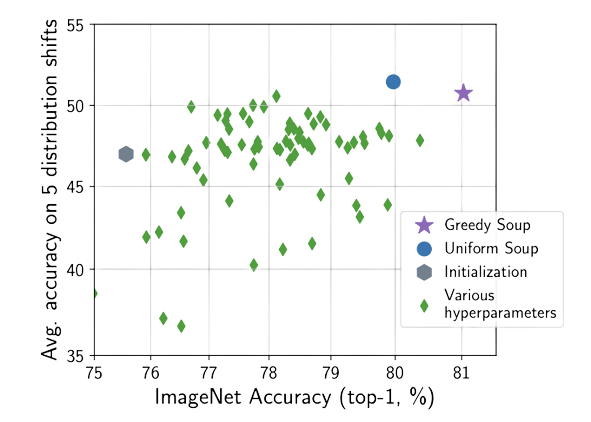

Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time

Mitchell Wortsman, Gabriel Ilharco, Samir Y Gadre, Rebecca Roelofs, Raphael Gontijo-Lopes, Ari S Morcos, Hongseok Namkoong, Ali Farhadi, Yair Carmon, Simon Kornblith, and Ludwig Schmidt

International Conference on Machine Learning (ICML), 2022.

PDF arXiv PMLR Code

title = {Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time},

author = {Wortsman, Mitchell and Ilharco, Gabriel and Gadre, Samir Ya and Roelofs, Rebecca and Gontijo-Lopes, Raphael and Morcos, Ari S and Namkoong, Hongseok and Farhadi, Ali and Carmon, Yair and Kornblith, Simon and Schmidt, Ludwig},

booktitle = {Proceedings of the 39th International Conference on Machine Learning},

pages = {23965--23998},

year = {2022},

editor = {Chaudhuri, Kamalika and Jegelka, Stefanie and Song, Le and Szepesvari, Csaba and Niu, Gang and Sabato, Sivan},

volume = {162},

series = {Proceedings of Machine Learning Research},

month = {17--23 Jul},

publisher = {PMLR},

pdf = {https://proceedings.mlr.press/v162/wortsman22a/wortsman22a.pdf},

url = {https://proceedings.mlr.press/v162/wortsman22a.html},

}

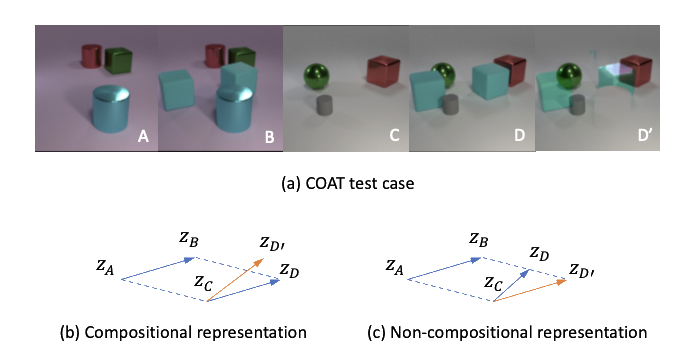

COAT: Measuring Object Compositionality in Emergent Representations

Sirui Xie, Ari S Morcos, Song-Chun Zhu, and Ramakrishna Vedantam

International Conference on Machine Learning (ICML), 2022.

PDF PMLR

title = {COAT: Measuring Object Compositionality in Emergent Representations},

author = {Xie, Sirui and Morcos, Ari S and Zhu, Song-Chun and Vedantam, Ramakrishna},

booktitle = {Proceedings of the 39th International Conference on Machine Learning},

pages = {24388--24413},

year = {2022},

editor = {Chaudhuri, Kamalika and Jegelka, Stefanie and Song, Le and Szepesvari, Csaba and Niu, Gang and Sabato, Sivan},

volume = {162},

series = {Proceedings of Machine Learning Research},

month = {17--23 Jul},

publisher = {PMLR},

pdf = {https://proceedings.mlr.press/v162/xie22b/xie22b.pdf},

url = {https://proceedings.mlr.press/v162/xie22b.html},

}

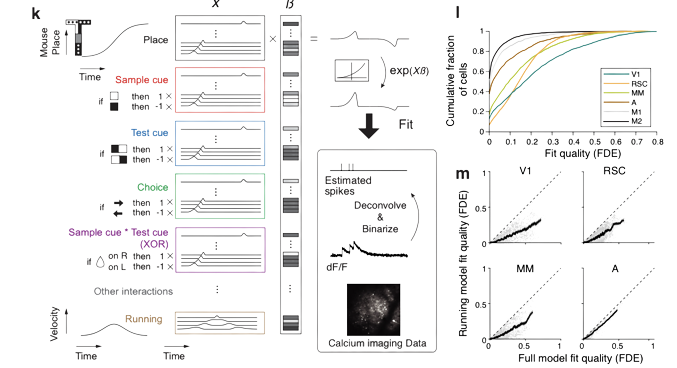

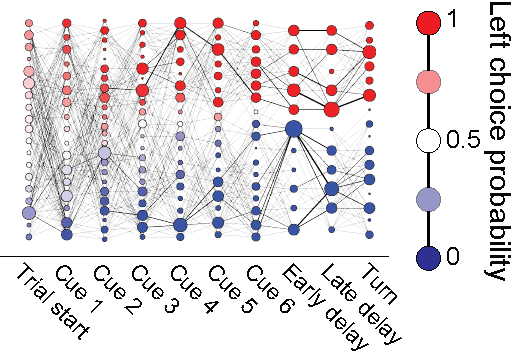

A distributed and efficient population code of mixed selectivity neurons for flexible navigation decisions

Shinichiro Kira, Houman Safaai, Ari S Morcos, Christopher D Harvey, and Stefano Panzeri

bioRxiv, 2022.

PDF bioRxiv

author = {Kira, Shinichiro and Safaai, Houman and Morcos, Ari S. and Panzeri, Stefano and Harvey, Christopher D.},

title = {A distributed and efficient population code of mixed selectivity neurons for flexible navigation decisions},

elocation-id = {2022.04.10.487349},

year = {2022},

doi = {10.1101/2022.04.10.487349},

publisher = {Cold Spring Harbor Laboratory},

abstract = {Decision-making requires flexibility to rapidly switch sensorimotor associations depending on behavioral goals stored in memory. We identified cortical areas and neural activity patterns that mediate this flexibility during virtual-navigation, where mice switched navigation toward or away from a visual cue depending on its match to a remembered cue. An optogenetics screen identified V1, posterior parietal cortex (PPC), and retrosplenial cortex (RSC) as necessary for accurate decisions. Calcium imaging revealed neurons that can mediate rapid sensorimotor switching by encoding a conjunction of a current and remembered visual cue that predicted the mouse{\textquoteright}s navigational choice from trial-to-trial. Their activity formed efficient population codes before correct, but not incorrect, choices. These neurons were distributed across posterior cortex, even V1, but were densest in RSC and sparsest in PPC. We propose the flexibility of navigation decisions arises from neurons that mix visual and memory information within a visual-parietal-retrosplenial network, centered in RSC. Competing Interest Statement: The authors have declared no competing interest.},

URL = {https://www.biorxiv.org/content/early/2022/04/10/2022.04.10.487349},

eprint = {https://www.biorxiv.org/content/early/2022/04/10/2022.04.10.487349.full.pdf},

journal = {bioRxiv}

}

2021

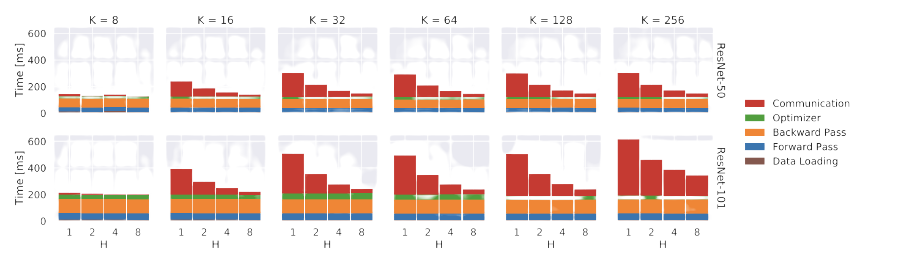

Trade-offs of Local SGD at Scale: An Empirical Study

Jose Javier Gonzalez Ortiz, Jonathan Frankle, Mike Rabbat, Ari S Morcos, and Nicolas Ballas

arXiv, 2021.

PDF arXiv

doi = {10.48550/ARXIV.2110.08133},

url = {https://arxiv.org/abs/2110.08133},

author = {Ortiz, Jose Javier Gonzalez and Frankle, Jonathan and Rabbat, Mike and Morcos, Ari and Ballas, Nicolas},

keywords = {Machine Learning (cs.LG), Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},

title = {Trade-offs of Local SGD at Scale: An Empirical Study},

publisher = {arXiv},

year = {2021}

}

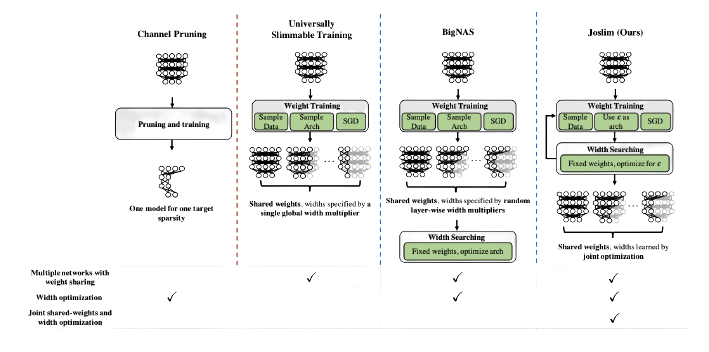

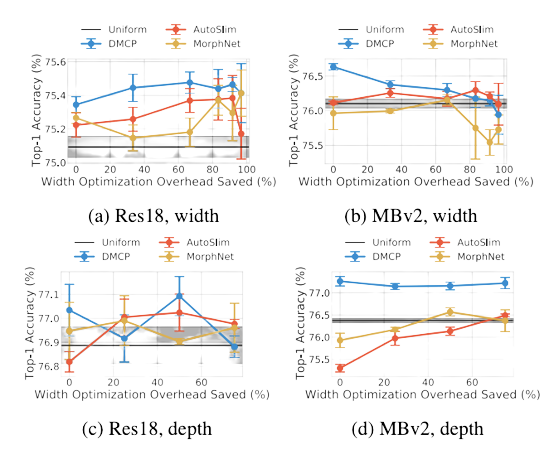

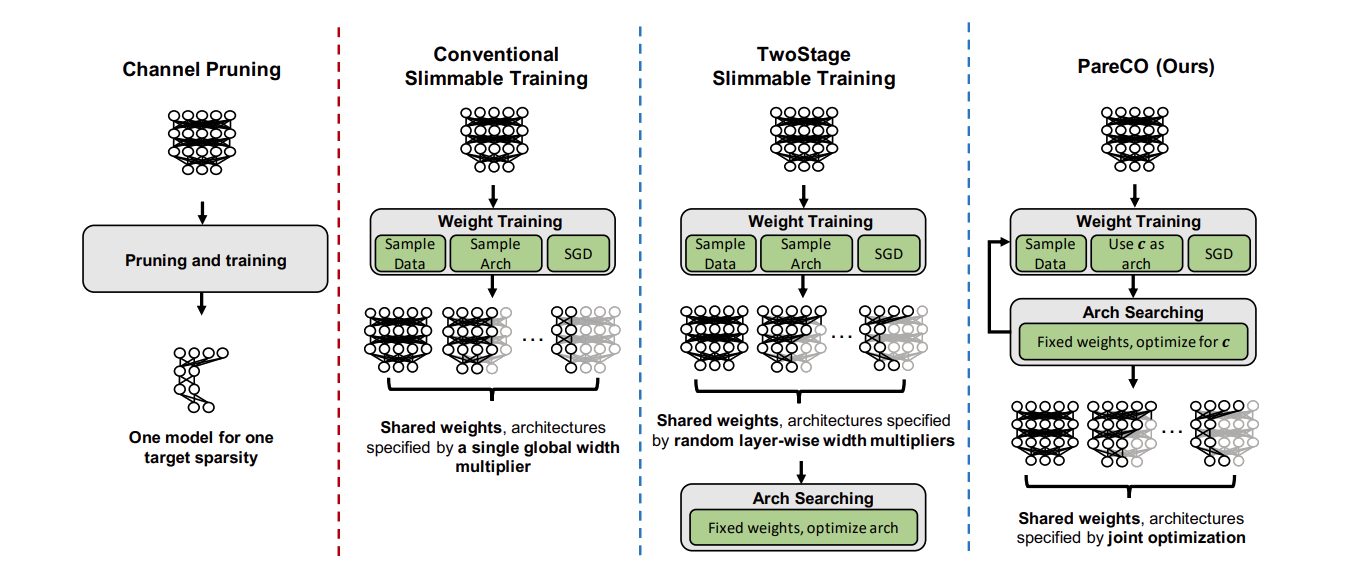

Joslim: Joint Widths and Weights Optimization for Slimmable Neural Networks

Ting-Wu Chin, Ari S. Morcos, and Diana Marculescu

Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 2021.

PDF arXiv ECML Code

title={Joslim: Joint Widths and Weights Optimization for Slimmable Neural Networks},

author={Chin, Ting-Wu and Morcos, Ari S and Marculescu, Diana},

booktitle={Joint European Conference on Machine Learning and Knowledge Discovery in Databases},

year={2021},

organization={Springer}

}

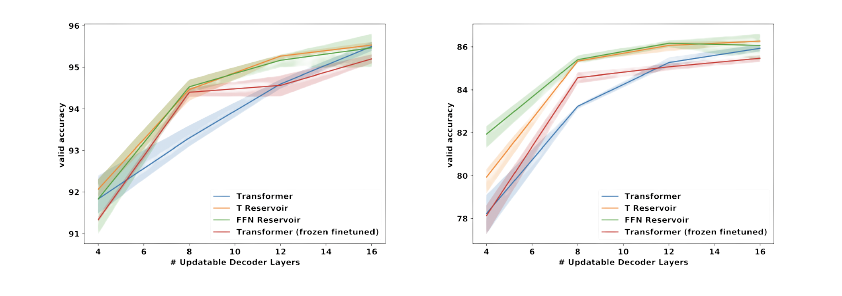

Reservoir Transformers

Sheng Shen, Alexei Baevski, Ari S Morcos, Kurt Keutzer, Michael Auli, and Douwe Kiela

Association for Computational Linguistics (ACL), 2021.

PDF arXiv ACL

title = "Reservoir Transformers",

author = "Shen, Sheng and

Baevski, Alexei and

Morcos, Ari and

Keutzer, Kurt and

Auli, Michael and

Kiela, Douwe",

booktitle = "Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers)",

month = aug,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.acl-long.331",

doi = "10.18653/v1/2021.acl-long.331",

pages = "4294--4309",

abstract = "We demonstrate that transformers obtain impressive performance even when some of the layers are randomly initialized and never updated. Inspired by old and well-established ideas in machine learning, we explore a variety of non-linear {``}reservoir{''} layers interspersed with regular transformer layers, and show improvements in wall-clock compute time until convergence, as well as overall performance, on various machine translation and (masked) language modelling tasks.",

}

Width Transfer: On the (In)variance of Width Optimization

Ting-Wu Chin, Diana Marculescu, and Ari S Morcos

CVPR Workshops, 2021.

PDF arXiv CVF

author = {Chin, Ting-Wu and Marculescu, Diana and Morcos, Ari S.},

title = {Width Transfer: On the (In)variance of Width Optimization},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},

month = {June},

year = {2021},

pages = {2990-2999}

}

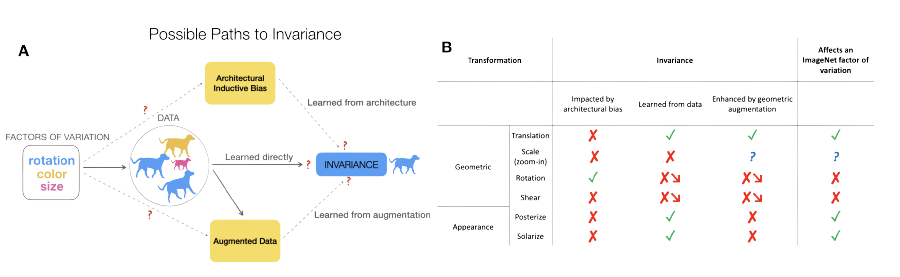

Grounding inductive biases in natural images: invariance stems from variations in data

Diane Bouchacourt, Mark Ibrahim, and Ari S Morcos

Neural Information Processing Systems (NeurIPS), 2021.

PDF arXiv NeurIPS Code

author = {Bouchacourt, Diane and Ibrahim, Mark and Morcos, Ari},

booktitle = {Advances in Neural Information Processing Systems},

editor = {M. Ranzato and A. Beygelzimer and Y. Dauphin and P.S. Liang and J. Wortman Vaughan},

pages = {19566--19579},

publisher = {Curran Associates, Inc.},

title = {Grounding inductive biases in natural images: invariance stems from variations in data},

url = {https://proceedings.neurips.cc/paper/2021/file/a2fe8c05877ec786290dd1450c3385cd-Paper.pdf},

volume = {34},

year = {2021}

}

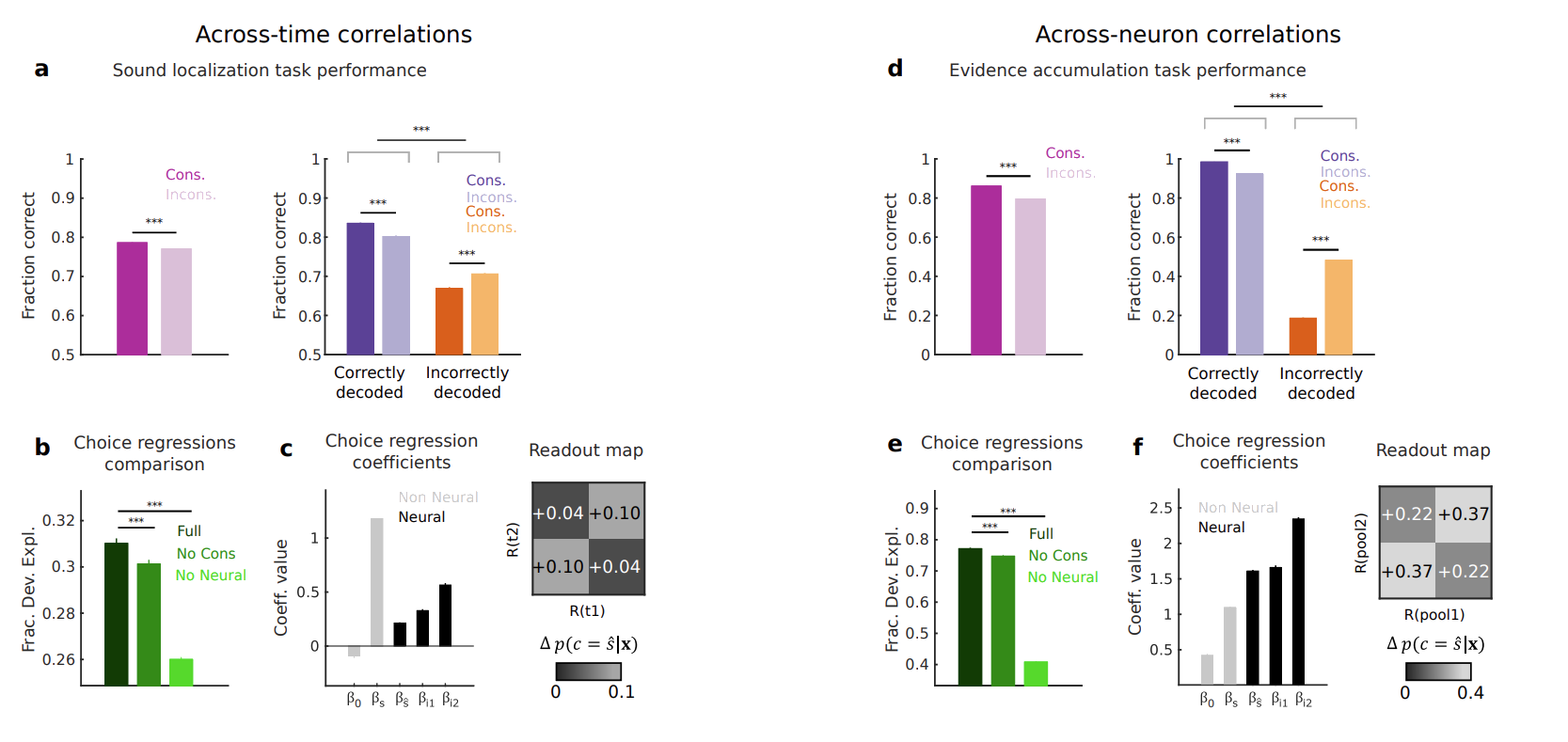

Correlations enhance the behavioral readout of neural population activity in association cortex

Martina Valente, Giuseppe Pica, Giulio Bondanelli, Monica Moroni, Caroline A. Runyan, Ari S Morcos, Christopher D Harvey, and Stefano Panzeri

Nature Neuroscience 24, 975–986 (2021). https://doi.org/10.1038/s41593-021-00845-1

PDF Nature Neuroscience

title={Correlations enhance the behavioral readout of neural population activity in association cortex},

author={Valente, Martina and Pica, Giuseppe and Bondanelli, Giulio and Moroni, Monica and Runyan, Caroline A and Morcos, Ari S and Harvey, Christopher D and Panzeri, Stefano},

journal={Nature neuroscience},

volume={24},

number={7},

pages={975--986},

year={2021},

publisher={Nature Publishing Group}

}

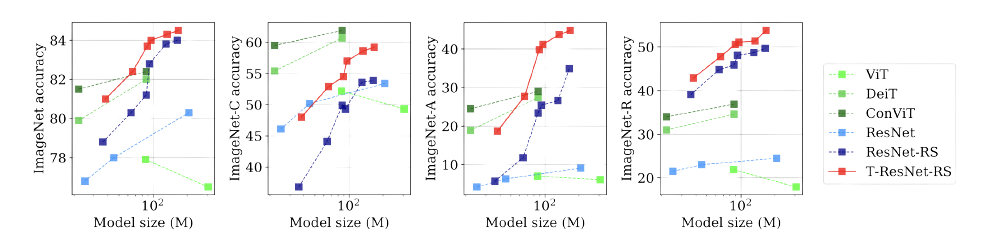

Transformed CNNs: recasting pre-trained convolutional layers with self-attention

Stéphane d'Ascoli, Levent Sagun, Giulio Biroli, and Ari S Morcos

arXiv, 2021.

PDF arXiv Code

title={Transformed CNNs: recasting pre-trained convolutional layers with self-attention},

author={Stéphane d'Ascoli and Levent Sagun and Giulio Biroli and Ari Morcos},

year={2021},

eprint={2106.05795},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

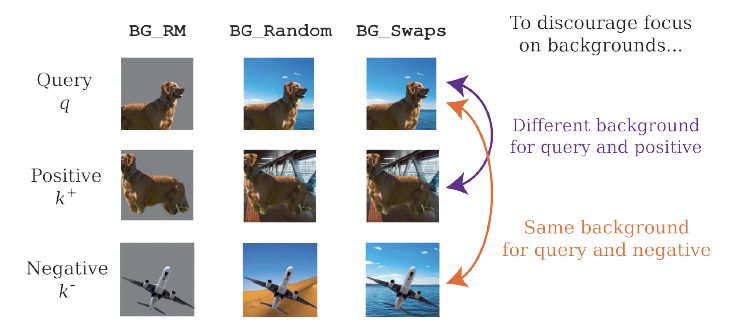

Characterizing and Improving the Robustness of Self-Supervised Learning through Background Augmentations

Chaitanya K. Ryali, David J. Schwab, and Ari S Morcos

arXiv, 2021.

PDF arXiv

doi = {10.48550/ARXIV.2103.12719},

url = {https://arxiv.org/abs/2103.12719},

author = {Ryali, Chaitanya K. and Schwab, David J. and Morcos, Ari S.},

keywords = {Computer Vision and Pattern Recognition (cs.CV), Artificial Intelligence (cs.AI), FOS: Computer and information sciences},

title = {Characterizing and Improving the Robustness of Self-Supervised Learning through Background Augmentations},

publisher = {arXiv},

year = {2021}

}

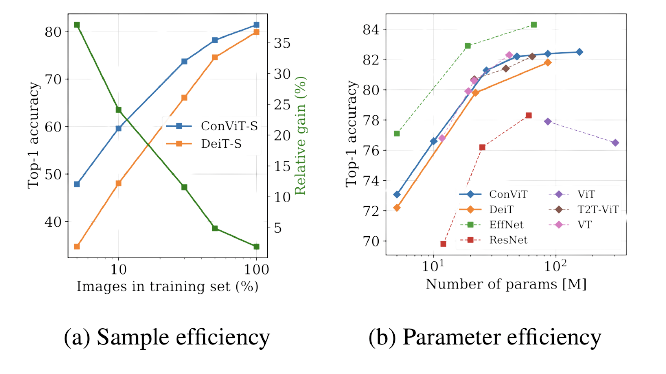

ConViT: Improving Vision Transformers with Soft Convolutional Inductive Biases

Stéphane d'Ascoli, Hugo Touvron, Matthew Leavitt, Ari S Morcos, Giulio Biroli, and Levent Sagun

International Conference on Machine Learning (ICML), 2021.

PDF arXiv PMLR Code

title = {ConViT: Improving Vision Transformers with Soft Convolutional Inductive Biases},

author = {D'Ascoli, St{\'e}phane and Touvron, Hugo and Leavitt, Matthew L and Morcos, Ari S and Biroli, Giulio and Sagun, Levent},

booktitle = {Proceedings of the 38th International Conference on Machine Learning},

pages = {2286--2296},

year = {2021},

editor = {Meila, Marina and Zhang, Tong},

volume = {139},

series = {Proceedings of Machine Learning Research},

month = {18--24 Jul},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v139/d-ascoli21a/d-ascoli21a.pdf},

url = {https://proceedings.mlr.press/v139/d-ascoli21a.html},

}

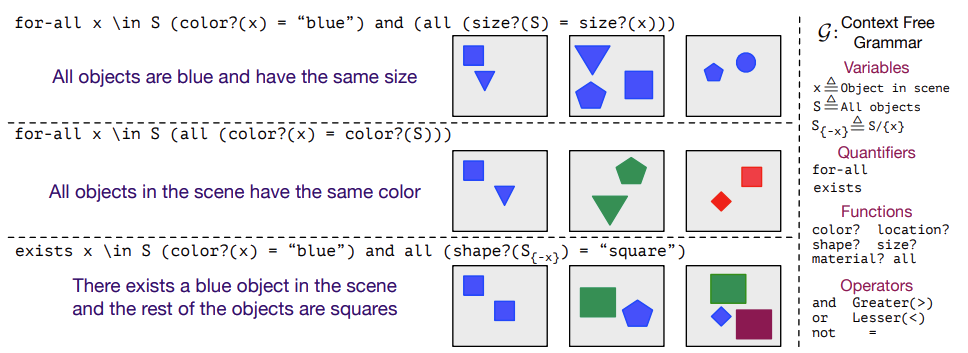

CURI: A Benchmark for Productive Concept Learning Under Uncertainty

Ramakrishna Vedantam, Arthur Szlam, Maximilian Nickel, Ari S Morcos, and Brenden Lake

International Conference on Machine Learning (ICML), 2021.

PDF arXiv PMLR

title = {CURI: A Benchmark for Productive Concept Learning Under Uncertainty},

author = {Vedantam, Ramakrishna and Szlam, Arthur and Nickel, Maximilian and Morcos, Ari and Lake, Brenden M},

booktitle = {Proceedings of the 38th International Conference on Machine Learning},

pages = {10519--10529},

year = {2021},

editor = {Meila, Marina and Zhang, Tong},

volume = {139},

series = {Proceedings of Machine Learning Research},

month = {18--24 Jul},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v139/vedantam21a/vedantam21a.pdf},

url = {https://proceedings.mlr.press/v139/vedantam21a.html}

}

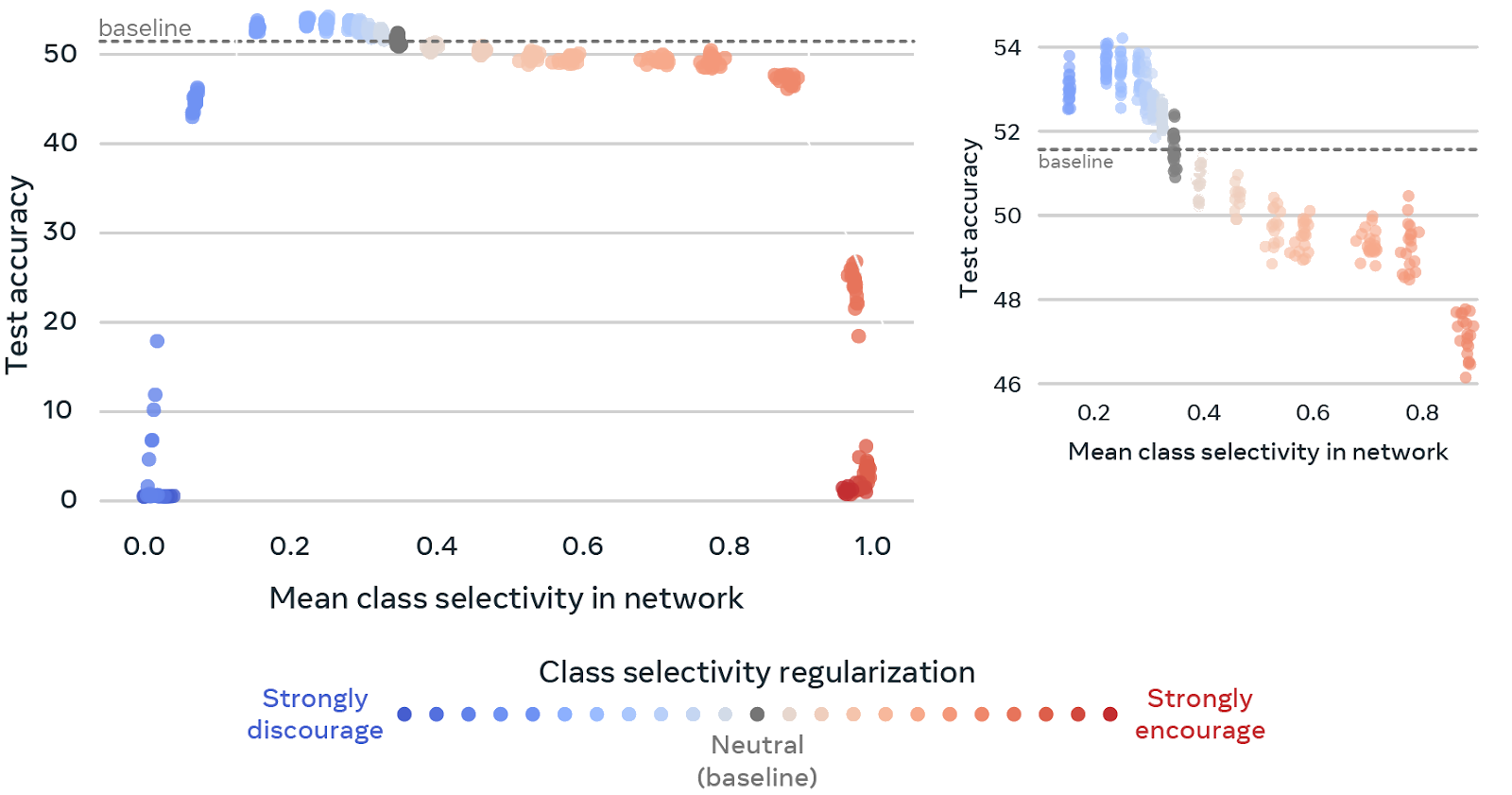

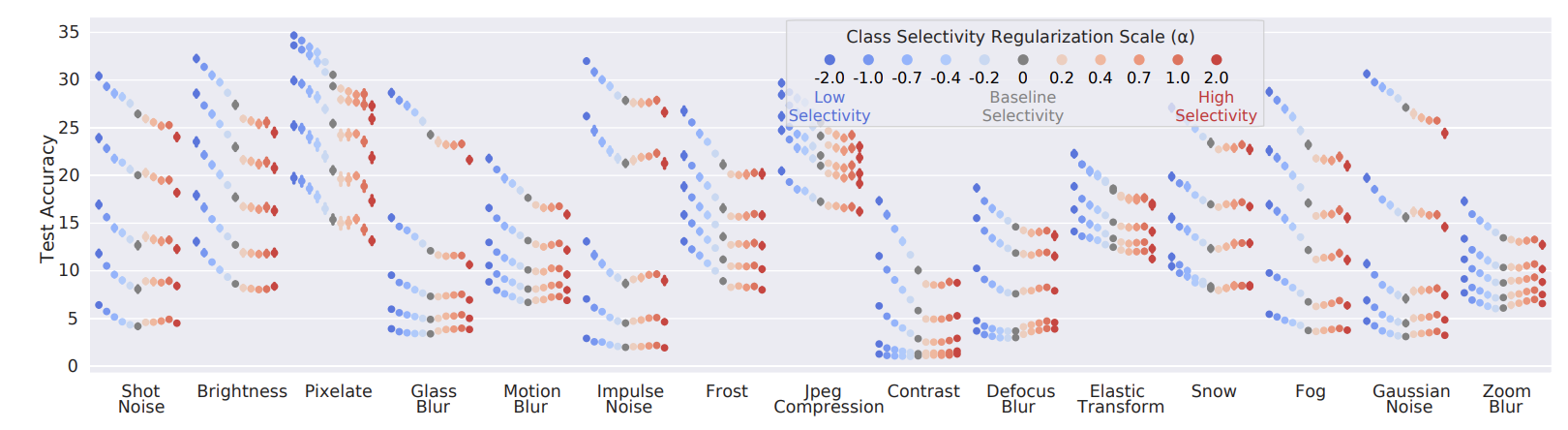

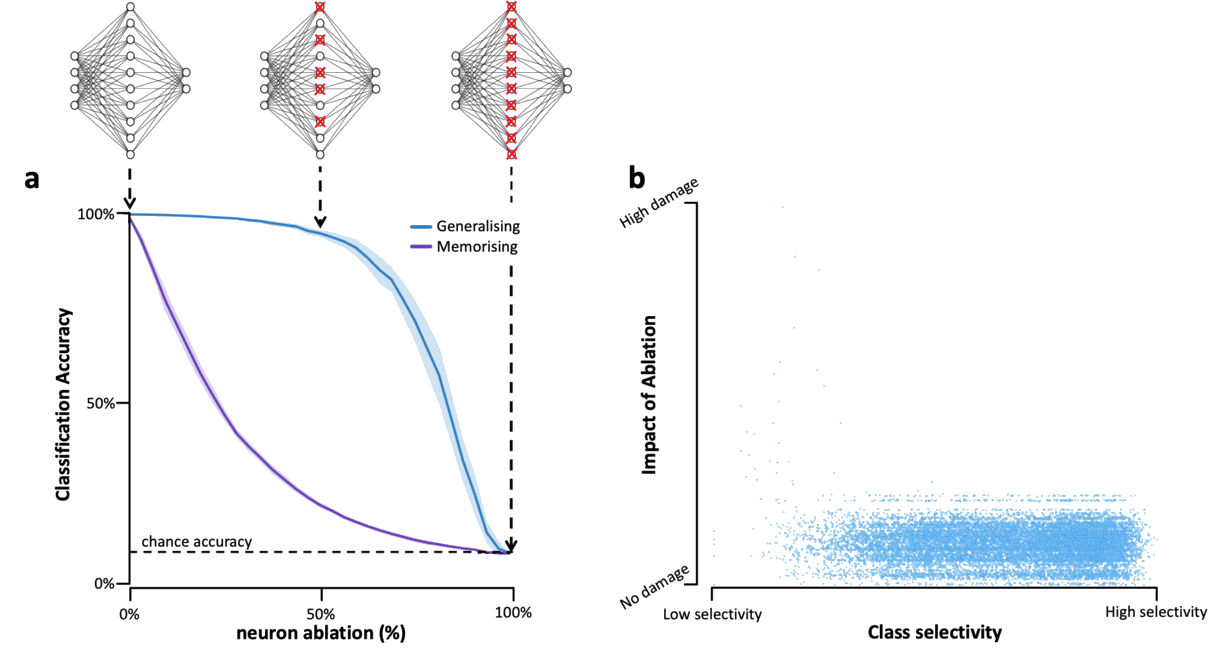

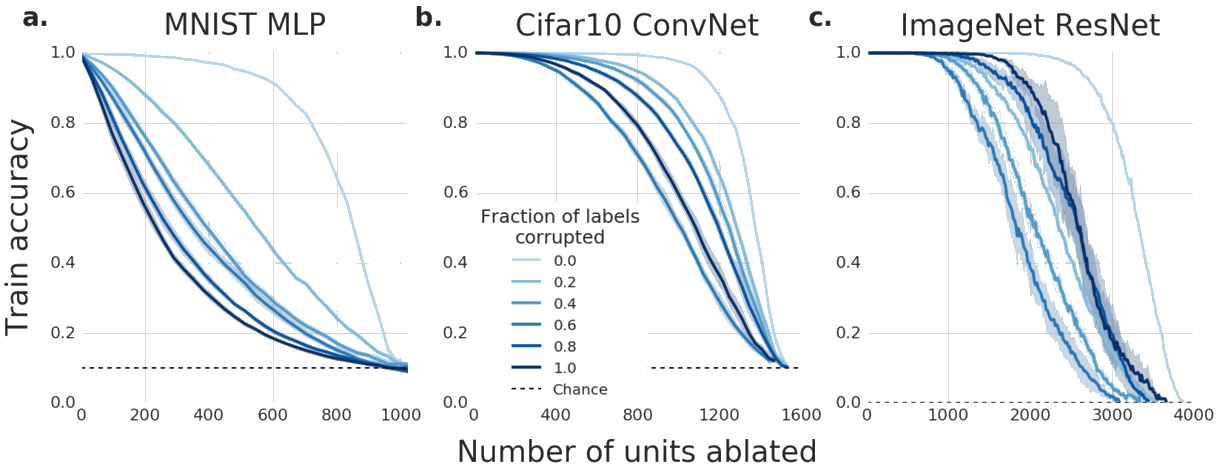

Selectivity considered harmful: evaluating the causal impact of class selectivity in DNNs

Matthew Leavitt and Ari S Morcos

International Conference on Learning Representations (ICLR), 2021.

Also appeared in:

ICML Workshop on Human Interpretability in Machine Learning, 2020.

PDF arXiv OpenReview

title={Selectivity considered harmful: evaluating the causal impact of class selectivity in DNNs},

author={Matthew L. Leavitt and Ari Morcos},

year={2021},

booktitle={International Conference on Learning Representations (ICLR)},

url={https://openreview.net/forum?id=8nl0k08uMi}

}

Facebook AI Blog: Easy-to-interpret neurons may hinder learning in deep neural networks

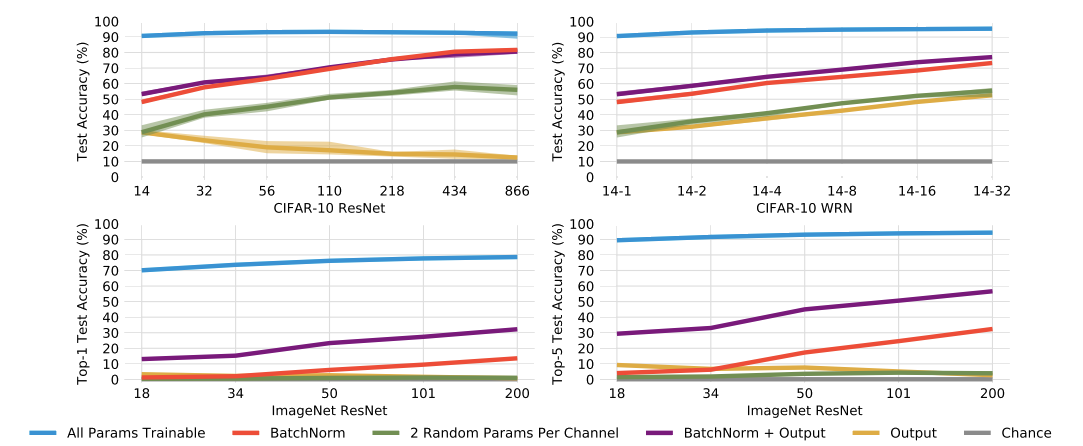

Training BatchNorm and Only BatchNorm: On the Expressive Power of Random Features in CNNs

Jonathan Frankle, David J. Schwab, and Ari S Morcos

International Conference on Learning Representations (ICLR), 2021.

Also appeared in:

NeurIPS Workshop on Science meets Engineering of Deep Learning, 2019.

PDF arXiv OpenReview Code

title={Training BatchNorm and Only BatchNorm: On the Expressive Power of Random Features in CNNs},

author={Jonathan Frankle and David J. Schwab and Ari S. Morcos},

year={2021},

booktitle={International Conference on Learning Representations (ICLR)},

url={https://openreview.net/forum?id=vYeQQ29Tbvx},

}

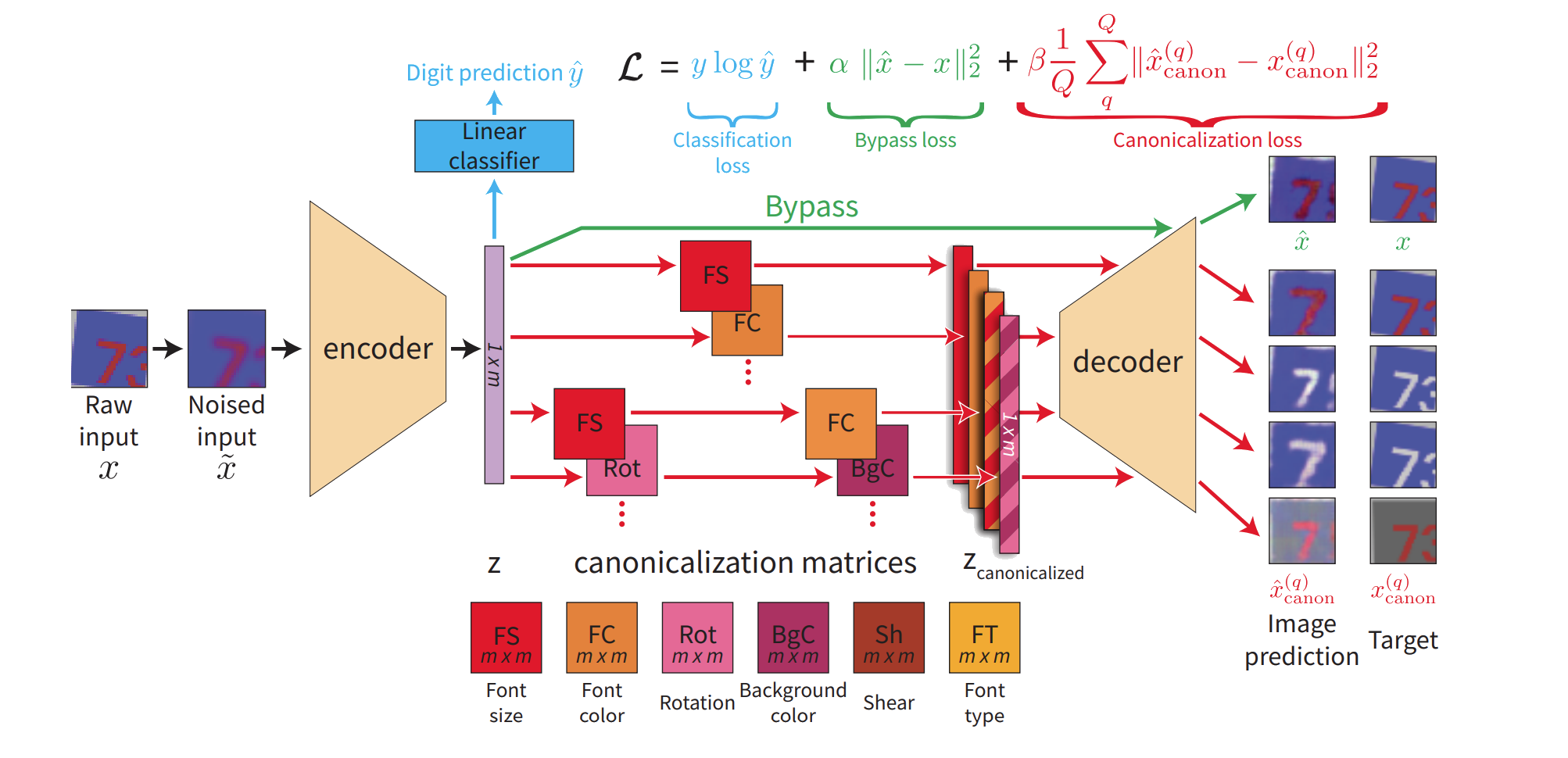

Representation Learning Through Latent Canonicalizations

Or Litany, Ari S Morcos, Srinath Sridhar, Leonidas Guibas, and Judy Hoffman

Winter Conference on Applications of Computer Vision (WACV), 2021.

PDF arXiv

title={Representation Learning Through Latent Canonicalizations},

author={Or Litany and Ari Morcos and Srinath Sridhar and Leonidas Guibas and Judy Hoffman},

year={2021},

booktitle={Winter Conference on Applications of Computer Vision (WACV)},

eprint={2002.11829},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

2020

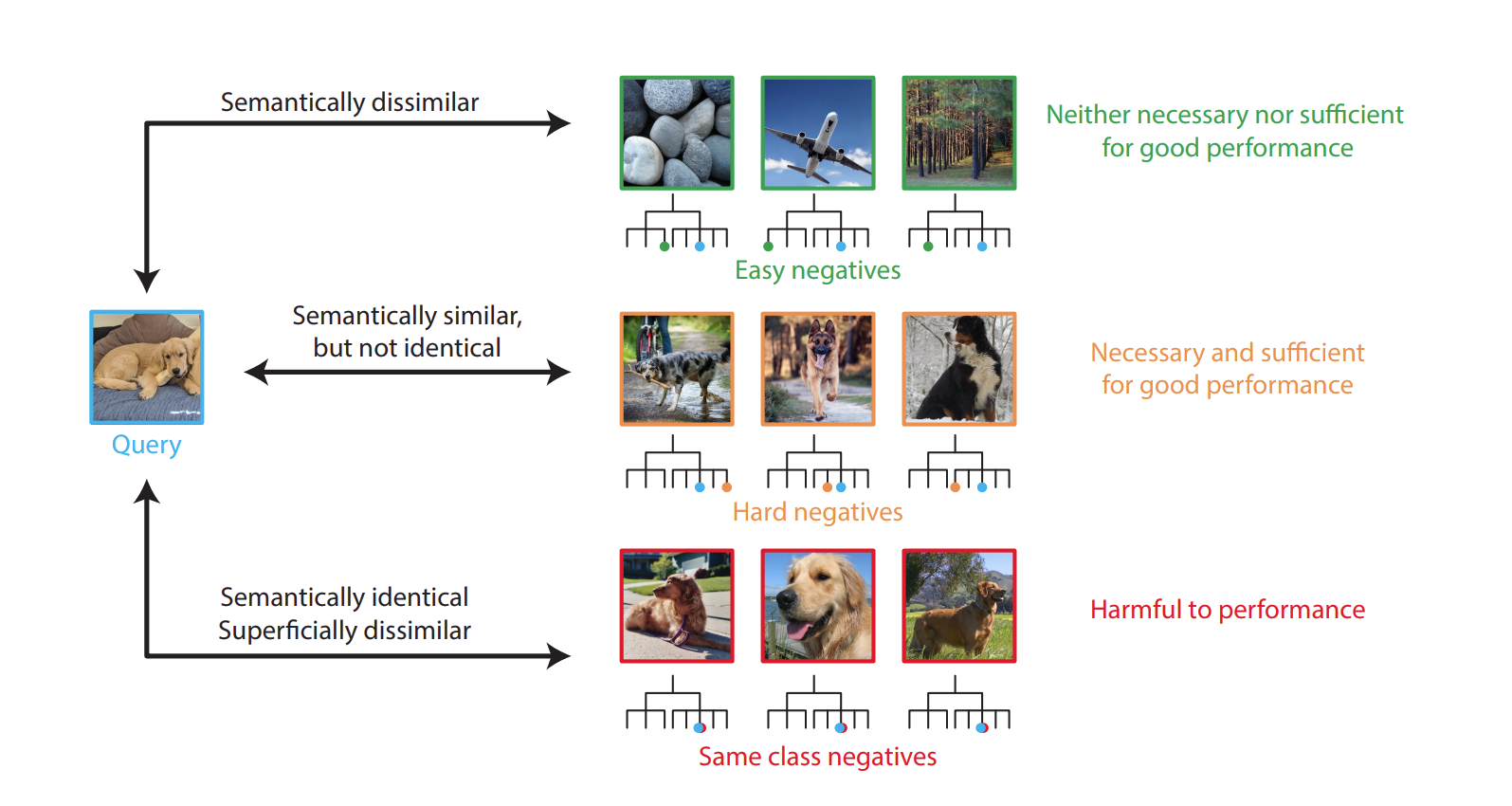

Are all negatives created equal in contrastive instance discrimination?

Tiffany (Tianhui) Cai, Jonathan Frankle, David J. Schwab, and Ari S Morcos

Science Meets Engineering of Deep Learning Workshop, 2020.

PDF arXiv

title={Are all negatives created equal in contrastive instance discrimination?},

author={Tiffany Tianhui Cai and Jonathan Frankle and David J. Schwab and Ari S. Morcos},

year={2020},

eprint={2010.06682},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Towards falsifiable interpretability research

Matthew Leavitt* and Ari S Morcos*

*equal contribution

Workshop on ML-Retrospectives, Surveys & Meta-Analyses @ NeurIPS, 2020.

PDF arXiv

title={Towards falsifiable interpretability research},

author={Matthew L. Leavitt and Ari Morcos},

year={2020},

eprint={2010.12016},

archivePrefix={arXiv},

primaryClass={cs.CY}

}

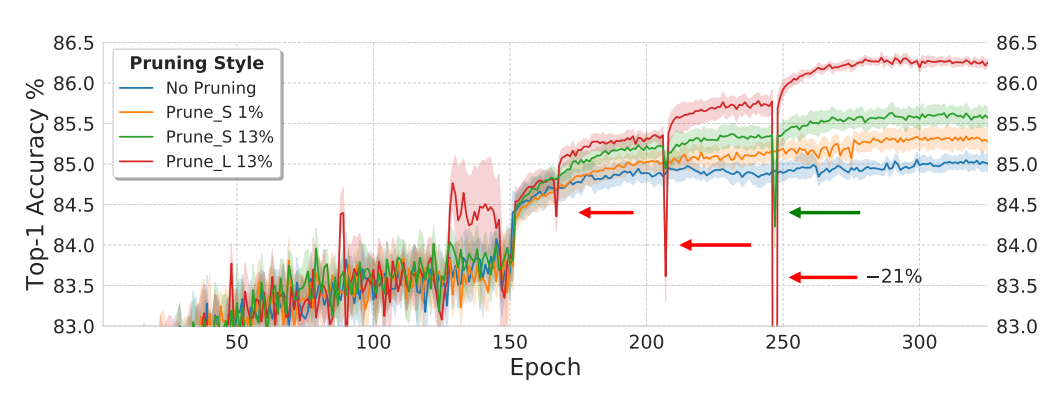

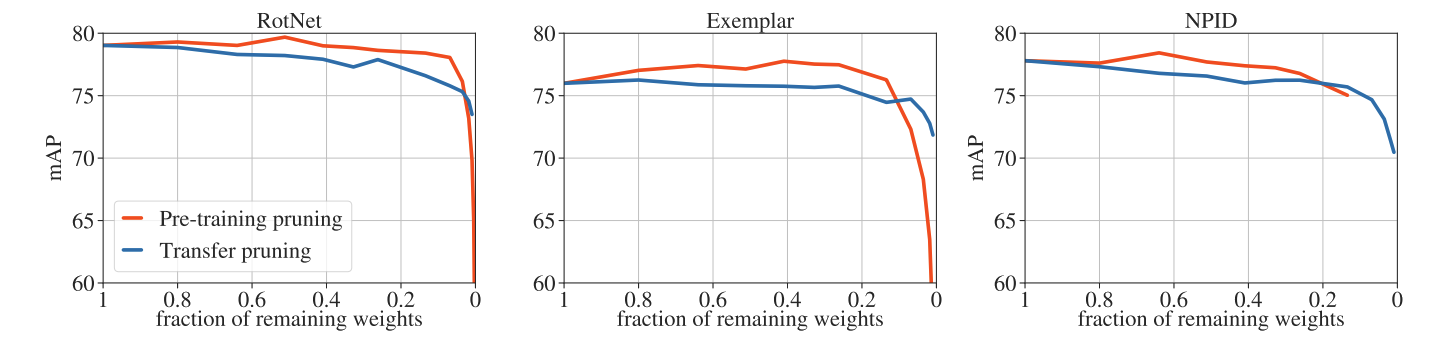

The generalization-stability tradeoff in neural network pruning

Brian R Bartoldson, Ari S Morcos, Adrian Barbu, and Gordon Erlebacher

Neural Information Processing Systems (NeurIPS), 2020.

Also appeared in:

ICML Workshop on Understanding and Improving Generalization in Deep Learning, 2019

NeurIPS Workshop on Science meets Engineering of Deep Learning, 2019.

PDF arXiv Code

title = {The generalization-stability tradeoff in neural network pruning},

author = {Bartoldson, Brian R and Morcos, Ari S and Barbu, Adrian and Erlebacher, Gordon},

booktitle = {Advances in Neural Information Processing Systems 33},

year = {2020},

publisher = {Curran Associates, Inc.},

}

Linking average- and worst-case perturbation robustness via class selectivity and dimensionality

Matthew Leavitt and Ari S Morcos

arXiv, 2020.

Also appeared in:

ICML Workshop on Uncertainty and Robustness in Deep Learning, 2020.

PDF arXiv

title={Linking average- and worst-case perturbation robustness via class selectivity and dimensionality},

author={Matthew L. Leavitt and Ari Morcos},

year={2020},

eprint={2010.07693},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

Facebook AI Blog: Easy-to-interpret neurons may hinder learning in deep neural networks

PareCO: Pareto-aware Channel Optimization for Slimmable Neural Networks

Ting-Wu (Rudy) Chin, Ari S Morcos, and Diana Marculescu

arXiv, 2020.

Also appeared in:

ICML Workshop on Challenges in Deploying and Monitoring Machine Learning Systems, 2020.

ICML Workshop on Real World Experiment Design and Active Learning, 2020.

KDD Workshop on Adversarial Learning Methods for Machine Learning and Data Mining, 2020.

KDD Workshop on Deep Learning Practice for High-Dimensional Sparse Data, 2020.

PDF arXiv

title={PareCO: Pareto-aware Channel Optimization for Slimmable Neural Networks},

author={Ting-Wu Chin and Ari S. Morcos and Diana Marculescu},

year={2020},

eprint={2007.11752},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

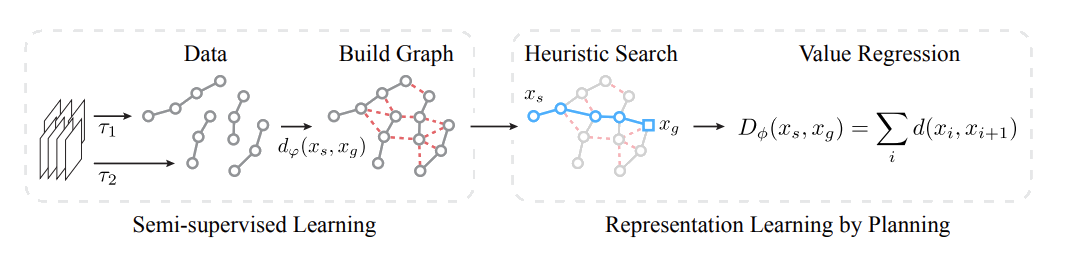

Plan2Vec: Unsupervised Representation Learning by Latent Plans

Ge Yang, Amy Zhang, Ari S Morcos, Joelle Pineau, Pieter Abbeel, Devi Parikh, and Roberto Calandra

Learning for Dynamics and Control, 2020.

PDF arXiv OpenReview PMLR

title = {Plan2Vec: Unsupervised Representation Learning by Latent Plans},

author = {Yang, Ge and Zhang, Amy and Morcos, Ari and Pineau, Joelle and Abbeel, Pieter and Calandra, Roberto},

pages = {935--946},

year = {2020},

editor = {Alexandre M. Bayen and Ali Jadbabaie and George Pappas and Pablo A. Parrilo and Benjamin Recht and Claire Tomlin and Melanie Zeilinger},

volume = {120},

series = {Proceedings of Machine Learning Research},

address = {The Cloud},

month = {10--11 Jun},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v120/yang20b/yang20b.pdf},

url = {http://proceedings.mlr.press/v120/yang20b.html},

abstract = {In this paper, we introduce Plan2Vec, a model-based method to learn state representation from sequences of off-policy observation data via planning. In contrast to prior methods, plan2vec does not require grounding via expert trajectories or actions, opening it up to many unsupervised learning scenarios. When applied to control, plan2vec learns a representation that amortizes the planning cost, enabling test time planning complexity that is linear in planning depth rather than exhaustive over the entire state space. We demonstrate the effectiveness of Plan2Vec on one simulated and two real-world image datasets, showing that Plan2Vec can effectively acquire representations that carry long-range structure to accelerate planning. Additional results and videos can be found at https://sites.google.com/view/plan2vec}

}

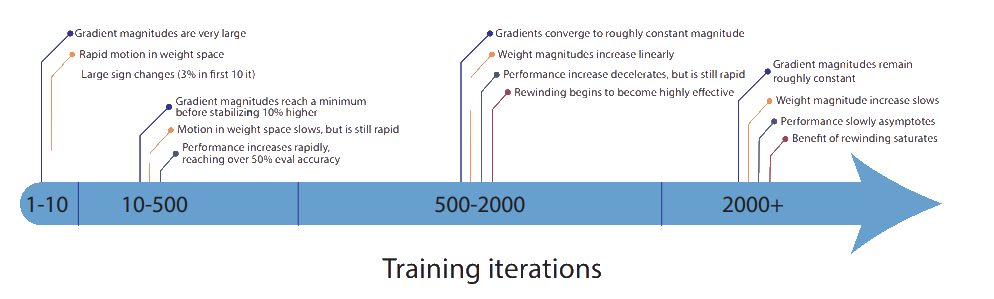

The early phase of neural network training

Jonathan Frankle, David J. Schwab, and Ari S Morcos

International Conference on Learning Representations (ICLR), 2020.

PDF arXiv OpenReview Code

frankle_early_phase_iclr_2020,

title={The Early Phase of Neural Network Training},

author={Jonathan Frankle and David J. Schwab and Ari S. Morcos},

booktitle={International Conference on Learning Representations (ICLR)},

year={2020},

url={https://openreview.net/forum?id=Hkl1iRNFwS}

}

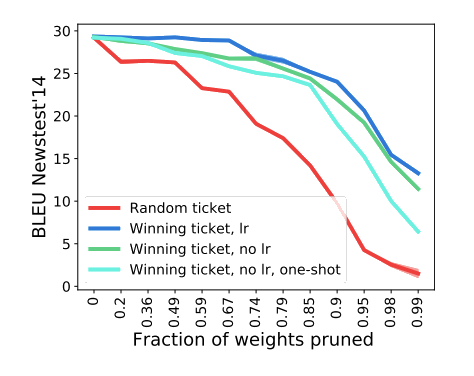

Playing the lottery with rewards and multiple languages: lottery tickets in RL and NLP

Haonan Yu, Sergey Edunov, Yuandong Tian, and Ari S Morcos

International Conference on Learning Representations (ICLR), 2020.

PDF arXiv OpenReview Talk

yu_lottery_tickets_rl_npl_iclr_2020,

title={Playing the lottery with rewards and multiple languages: lottery tickets in RL and NLP},

author={Haonan Yu and Sergey Edunov and Yuandong Tian and Ari S. Morcos},

booktitle={International Conference on Learning Representations (ICLR)},

year={2020},

url={https://openreview.net/forum?id=S1xnXRVFwH}

}

Facebook AI Blog: Understanding the generalization of lottery tickets in neural networks

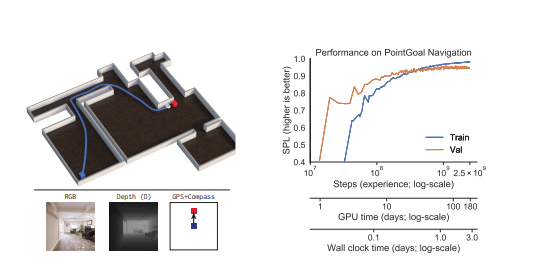

DD-PPO: Learning near-perfect PointGoal navigators from 2.5 billion frames

Erik Wijmans, Abhishek Kadian, Ari S Morcos, Stefan Lee, Irfan Essa, Devi Parikh, Manolis Savva, and Dhruv Batra

International Conference on Learning Representations (ICLR), 2020.

PDF arXiv OpenReview Code Video

wijmans_dd_ppo_iclr_2020,

title={DD-PPO: Learning Near-Perfect PointGoal Navigators from 2.5 Billion Frames},

author={Erik Wijmans and Abhishek Kadian and Ari Morcos and Stefan Lee and Irfan Essa and Devi Parikh and Manolis Savva and Dhruv Batra},

booktitle={International Conference on Learning Representations (ICLR)},

year={2020},

url={https://openreview.net/forum?id=H1gX8C4YPr}

}

Analyzing Visual Representations in Embodied Navigation Tasks

Erik Wijmans, Julian Straub, Dhruv Batra, Irfan Essa, Judy Hoffman, and Ari S Morcos

arXiv, 2020.

PDF arXiv

title={Analyzing Visual Representations in Embodied Navigation Tasks},

author={Erik Wijmans and Julian Straub and Dhruv Batra and Irfan Essa and Judy Hoffman and Ari Morcos},

year={2020},

eprint={2003.05993},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Pruning Convolutional Neural Networks with Self-Supervision

Mathilde Caron, Ari S Morcos, Piotr Bojanowski, Julien Mairal, and Armand Joulin

arXiv, 2020.

PDF arXiv

title={Pruning Convolutional Neural Networks with Self-Supervision},

author={Mathilde Caron and Ari Morcos and Piotr Bojanowski and Julien Mairal and Armand Joulin},

year={2020},

eprint={2001.03554},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

2019

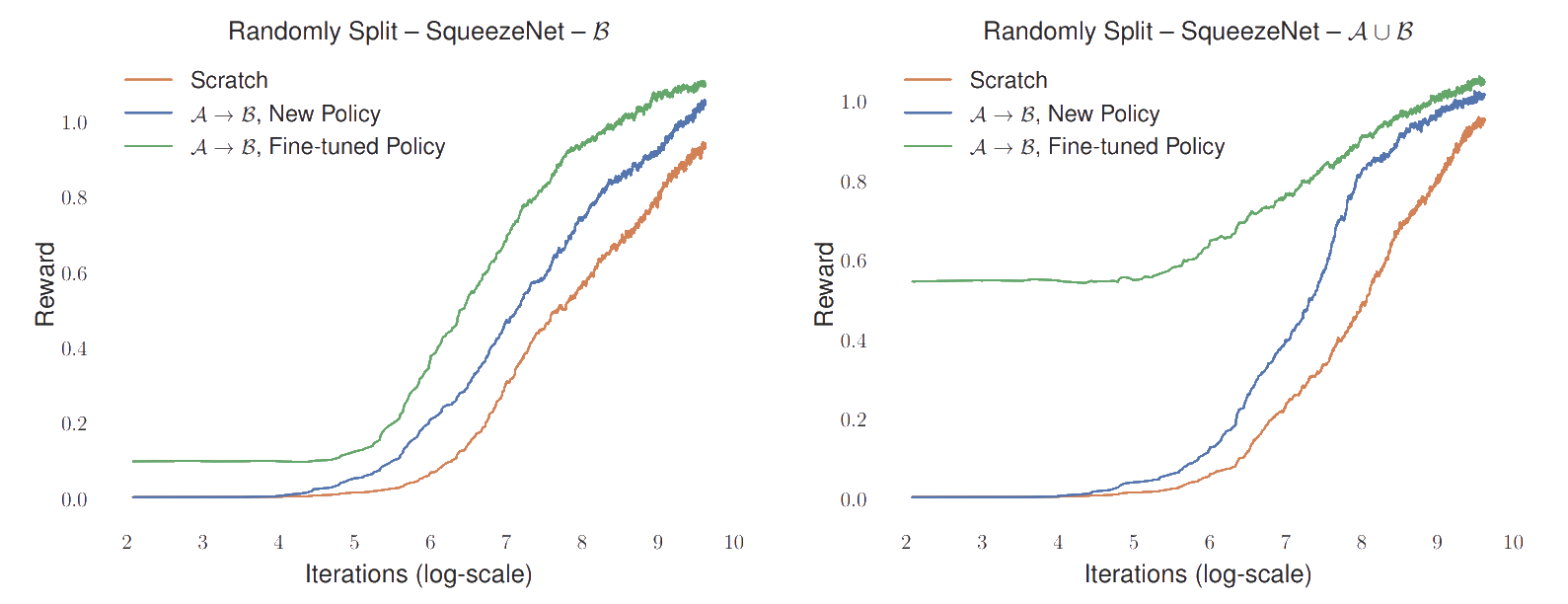

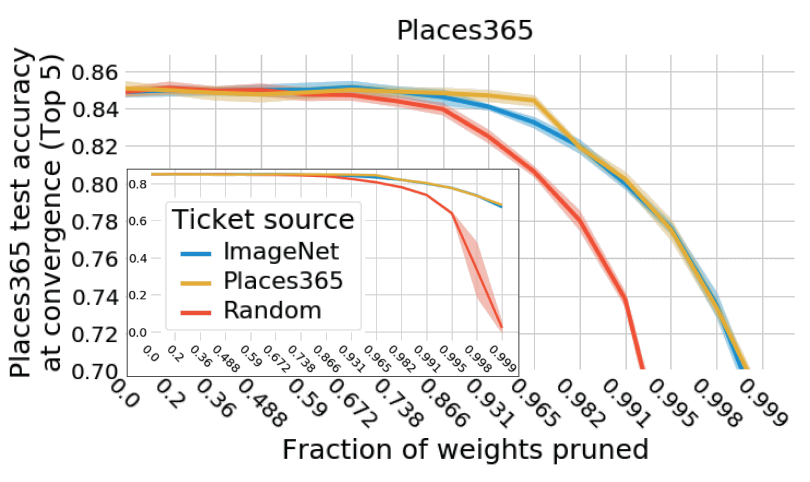

One ticket to win them all: generalizing lottery ticket initializations across datasets and optimizers

Ari S Morcos, Haonan Yu, Michela Paganini, and Yuandong Tian

Neural Information Processing Systems (NeurIPS), 2019.

PDF arXiv NeurIPS Talk

title = {One ticket to win them all: generalizing lottery ticket initializations across datasets and optimizers},

author = {Morcos, Ari and Yu, Haonan and Paganini, Michela and Tian, Yuandong},

booktitle = {Advances in Neural Information Processing Systems 32},

editor = {H. Wallach and H. Larochelle and A. Beygelzimer and F. d\textquotesingle Alch\'{e}-Buc and E. Fox and R. Garnett},

pages = {4932--4942},

year = {2019},

publisher = {Curran Associates, Inc.},

url = {http://papers.nips.cc/paper/8739-one-ticket-to-win-them-all-generalizing-lottery-ticket-initializations-across-datasets-and-optimizers.pdf}

}

Facebook AI Blog: Understanding the generalization of lottery tickets in neural networks

Luck Matters: Understanding Training Dynamics of Deep ReLU Networks

Yuandong Tian, Tina Jiang, Qucheng Gong, and Ari S Morcos

arXiv, 2019.

Also appeared in:

ICML Workshop on Understanding and Improving Generalization in Deep Learning, 2019

PDF arXiv Poster

title={Luck Matters: Understanding Training Dynamics of Deep ReLU Networks},

author={Yuandong Tian and Tina Jiang and Qucheng Gong and Ari Morcos},

year={2019},

eprint={1905.13405},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

Facebook AI Blog: Understanding the generalization of lottery tickets in neural networks

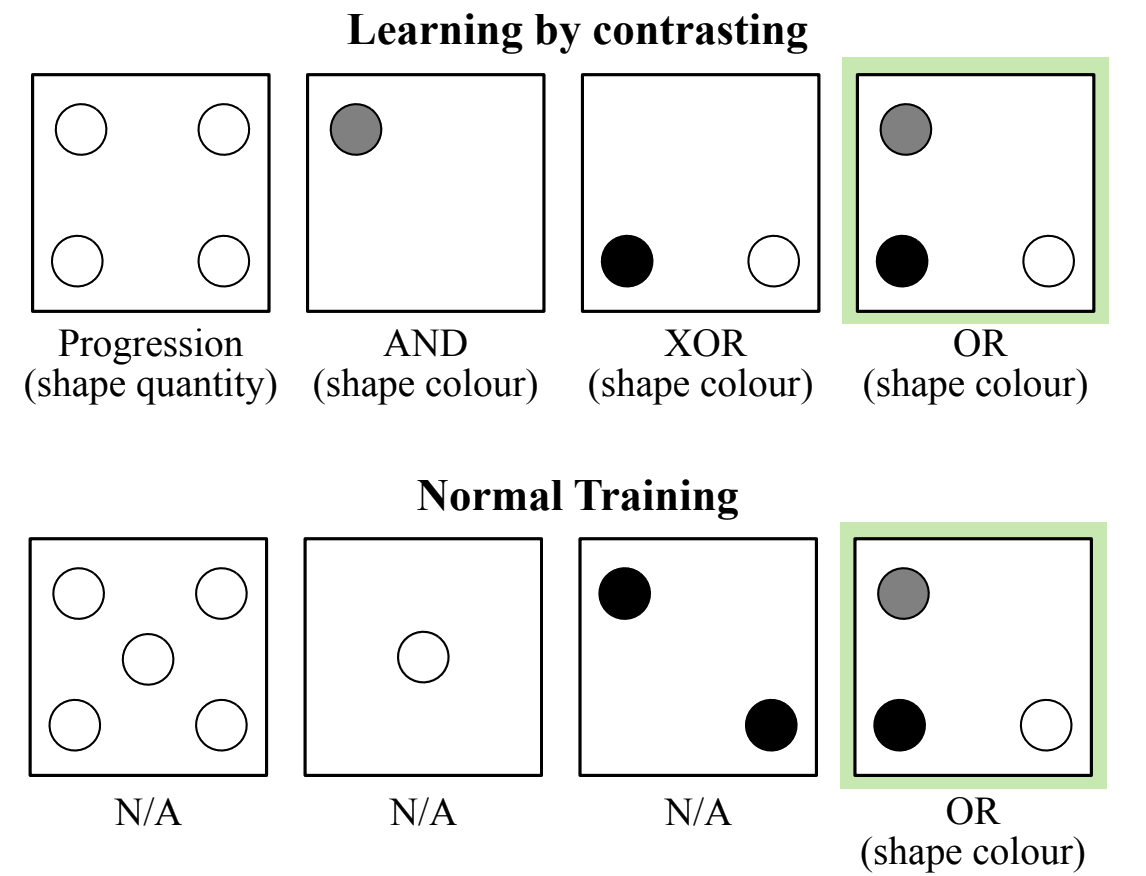

Learning to make analogies by contrasting abstract relational structure

Felix Hill*, Adam Santoro*, David GT Barrett, Ari S Morcos, and Timothy Lillicrap

*equal contribution, alphabetical order

International Conference on Learning Representations (ICLR), 2019.

PDF arXiv OpenReview

hill2018learning,

title={Learning to Make Analogies by Contrasting Abstract Relational Structure},

author={Felix Hill and Adam Santoro and David Barrett and Ari Morcos and Timothy Lillicrap},

booktitle={International Conference on Learning Representations},

year={2019},

url={https://openreview.net/forum?id=SylLYsCcFm},

}

Analyzing biological and artificial neural networks: challenges with opportunities for synergy?

David GT Barrett*, Ari S Morcos*, and Jakob H Macke

*equal contribution, alphabetical order

Current Opinion in Neurobiology, vol. 55, pp. 55-64, 2019. doi: https://doi.org/10.1016/j.conb.2019.01.007.

PDF Current Opinion arXiv

title = "Analyzing biological and artificial neural networks: challenges with opportunities for synergy?",

journal = "Current Opinion in Neurobiology",

volume = "55",

pages = "55 - 64",

year = "2019",

note = "Machine Learning, Big Data, and Neuroscience",

issn = "0959-4388",

doi = "https://doi.org/10.1016/j.conb.2019.01.007",

url = "http://www.sciencedirect.com/science/article/pii/S0959438818301569",

author = "David GT Barrett and Ari S Morcos and Jakob H Macke",

abstract = "Deep neural networks (DNNs) transform stimuli across multiple processing stages to produce representations that can be used to solve complex tasks, such as object recognition in images. However, a full understanding of how they achieve this remains elusive. The complexity of biological neural networks substantially exceeds the complexity of DNNs, making it even more challenging to understand the representations they learn. Thus, both machine learning and computational neuroscience are faced with a shared challenge: how can we analyze their representations in order to understand how they solve complex tasks? We review how data-analysis concepts and techniques developed by computational neuroscientists can be useful for analyzing representations in DNNs, and in turn, how recently developed techniques for analysis of DNNs can be useful for understanding representations in biological neural networks. We explore opportunities for synergy between the two fields, such as the use of DNNs as in silico model systems for neuroscience, and how this synergy can lead to new hypotheses about the operating principles of biological neural networks."

}

2018

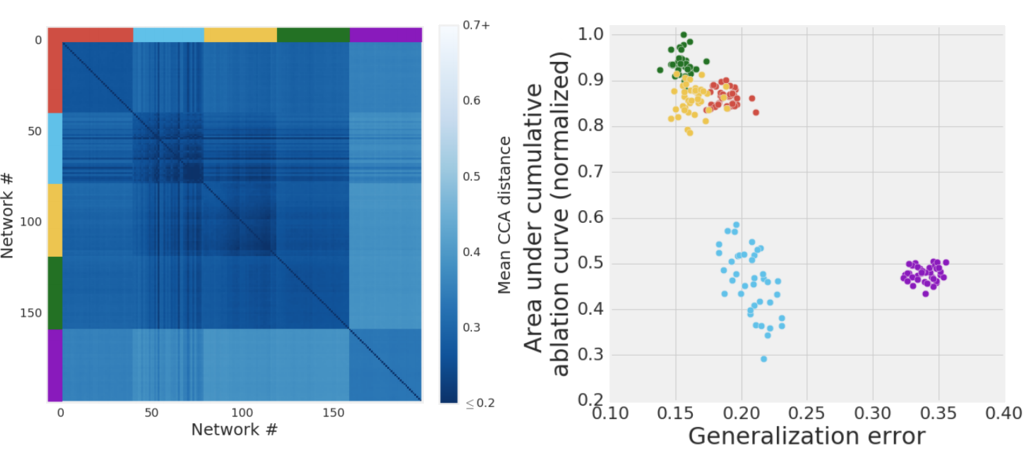

Insights on representational similarity in neural networks with canonical correlation

Ari S Morcos*, Maithra Raghu*, and Samy Bengio

*equal contribution, alphabetical order

Neural Information Processing Systems (NeurIPS), 2018.

PDF arXiv NeurIPS Poster Code

title = {Insights on representational similarity in neural networks with canonical correlation},

author = {Morcos, Ari and Raghu, Maithra and Bengio, Samy},

booktitle = {Advances in Neural Information Processing Systems 31},

editor = {S. Bengio and H. Wallach and H. Larochelle and K. Grauman and N. Cesa-Bianchi and R. Garnett},

pages = {5727--5736},

year = {2018},

publisher = {Curran Associates, Inc.},

url = {http://papers.nips.cc/paper/7815-insights-on-representational-similarity-in-neural-networks-with-canonical-correlation.pdf}

}

Google AI Blog: How Can Neural Network Similarity Help Us Understand Training and Generalization?

Human-level performance in first-person multiplayer games with population-based deep reinforcement learning

Max Jaderberg*, Wojciech M. Czarnecki*, Iain Dunning*, Luke Marris, Guy Lever, Antonio Garcia Castaneda, Charles Beattie, Neil C Rabinowitz, Ari S Morcos, Avraham Ruderman, Nicolas Sonnerat, Tim Green, Louise Deason, Joel Z Leibo, David Silver, Demis Hassabis, Koray Kavukcuoglu, and Thore Graepel

*equal contribution

Science 31 May 2019, Vol. 364, Issue 6443, pp. 859-865, doi: 10.1126/science.aau6249

PDF arXiv Science

author = {Jaderberg, Max and Czarnecki, Wojciech M. and Dunning, Iain and Marris, Luke and Lever, Guy and Casta{\~n}eda, Antonio Garcia and Beattie, Charles and Rabinowitz, Neil C. and Morcos, Ari S. and Ruderman, Avraham and Sonnerat, Nicolas and Green, Tim and Deason, Louise and Leibo, Joel Z. and Silver, David and Hassabis, Demis and Kavukcuoglu, Koray and Graepel, Thore},

title = {Human-level performance in 3D multiplayer games with population-based reinforcement learning},

volume = {364},

number = {6443},

pages = {859--865},

year = {2019},

doi = {10.1126/science.aau6249},

publisher = {American Association for the Advancement of Science},

abstract = {Artificially intelligent agents are getting better and better at two-player games, but most real-world endeavors require teamwork. Jaderberg et al. designed a computer program that excels at playing the video game Quake III Arena in Capture the Flag mode, where two multiplayer teams compete in capturing the flags of the opposing team. The agents were trained by playing thousands of games, gradually learning successful strategies not unlike those favored by their human counterparts. Computer agents competed successfully against humans even when their reaction times were slowed to match those of humans. Science, this issue p. 859. Reinforcement learning (RL) has shown great success in increasingly complex single-agent environments and two-player turn-based games. However, the real world contains multiple agents, each learning and acting independently to cooperate and compete with other agents. We used a tournament-style evaluation to demonstrate that an agent can achieve human-level performance in a three-dimensional multiplayer first-person video game, Quake III Arena in Capture the Flag mode, using only pixels and game points scored as input. We used a two-tier optimization process in which a population of independent RL agents are trained concurrently from thousands of parallel matches on randomly generated environments. Each agent learns its own internal reward signal and rich representation of the world. These results indicate the great potential of multiagent reinforcement learning for artificial intelligence research.},

issn = {0036-8075},

URL = {https://science.sciencemag.org/content/364/6443/859},

eprint = {https://science.sciencemag.org/content/364/6443/859.full.pdf},

journal = {Science}

}

DeepMind Blog: Capture the Flag: the emergence of complex cooperative agents

Engadget MIT Technology Review Venture Beat Gamasutra Fortune The Verge The Telegraph Business Insider Two Minute Papers

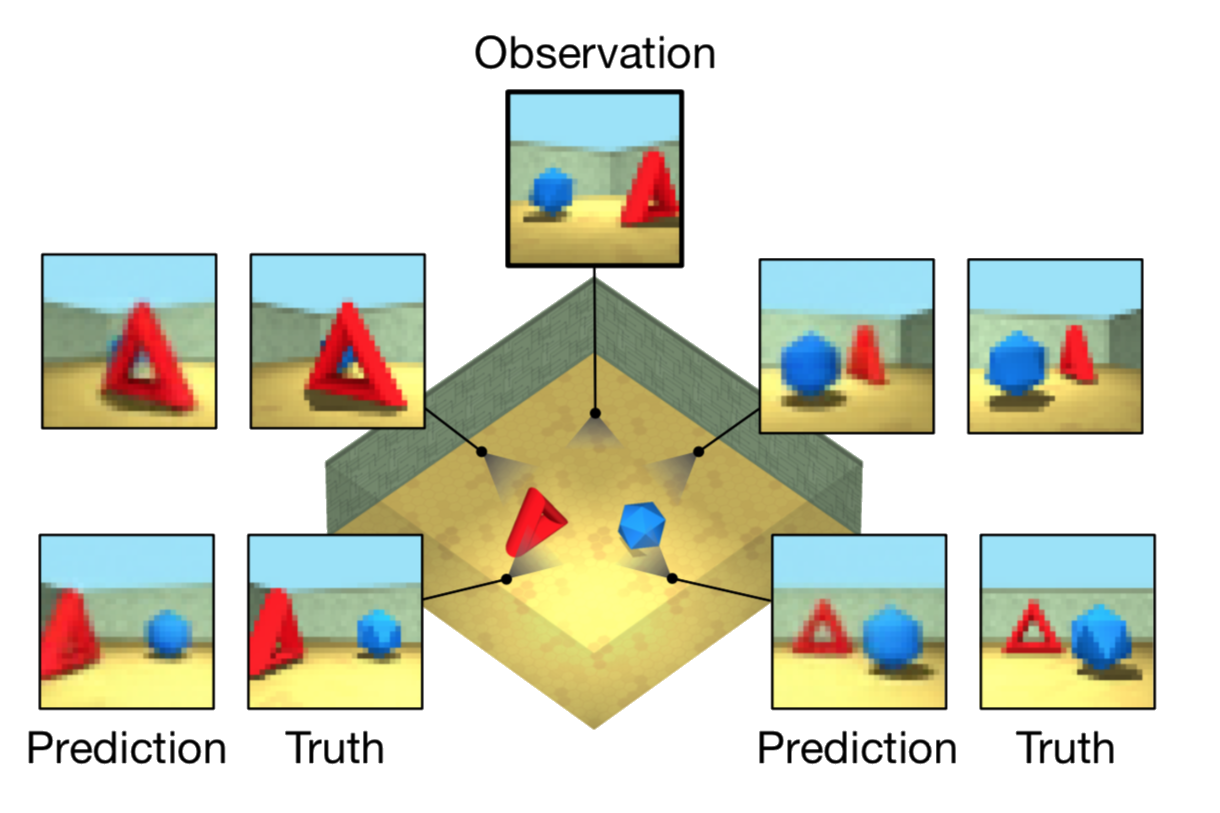

Neural scene representation and rendering

SM Ali Eslami*, Danilo J Rezende*, Frederic Besse, Fabio Viola, Ari S Morcos, Marta Garnelo, Avraham Ruderman, Andrei A. Rusu, Ivo Danihelka, Karol Gregor, David P. Reichert, Lars Buesing, Theophane Weber, Oriol Vinyals, Dan Rosenbaum, Neil C Rabinowitz, Helen King, Chloe Hillier, Matthew Botvinick, Daan Wierstra, Koray Kavukcuoglu, and Demis Hassabis

*equal contribution

Author contribution: A.S.M. designed and performed analysis experiments.

Science 15 Jun 2018, Vol. 360, Issue 6394, pp. 1204-1210, doi: 10.1126/science.aar6170

PDF Science

author = {Eslami, S. M. Ali and Jimenez Rezende, Danilo and Besse, Frederic and Viola, Fabio and Morcos, Ari S. and Garnelo, Marta and Ruderman, Avraham and Rusu, Andrei A. and Danihelka, Ivo and Gregor, Karol and Reichert, David P. and Buesing, Lars and Weber, Theophane and Vinyals, Oriol and Rosenbaum, Dan and Rabinowitz, Neil and King, Helen and Hillier, Chloe and Botvinick, Matt and Wierstra, Daan and Kavukcuoglu, Koray and Hassabis, Demis},

title = {Neural scene representation and rendering},

volume = {360},

number = {6394},

pages = {1204--1210},

year = {2018},

doi = {10.1126/science.aar6170},

publisher = {American Association for the Advancement of Science},

abstract = {To train a computer to {\textquotedblleft}recognize{\textquotedblright} elements of a scene supplied by its visual sensors, computer scientists typically use millions of images painstakingly labeled by humans. Eslami et al. developed an artificial vision system, dubbed the Generative Query Network (GQN), that has no need for such labeled data. Instead, the GQN first uses images taken from different viewpoints and creates an abstract description of the scene, learning its essentials. Next, on the basis of this representation, the network predicts what the scene would look like from a new, arbitrary viewpoint. Science, this issue p. 1204. Scene representation{\textemdash}the process of converting visual sensory data into concise descriptions{\textemdash}is a requirement for intelligent behavior. Recent work has shown that neural networks excel at this task when provided with large, labeled datasets. However, removing the reliance on human labeling remains an important open problem. To this end, we introduce the Generative Query Network (GQN), a framework within which machines learn to represent scenes using only their own sensors. The GQN takes as input images of a scene taken from different viewpoints, constructs an internal representation, and uses this representation to predict the appearance of that scene from previously unobserved viewpoints. The GQN demonstrates representation learning without human labels or domain knowledge, paving the way toward machines that autonomously learn to understand the world around them.},

issn = {0036-8075},

URL = {https://science.sciencemag.org/content/360/6394/1204},

eprint = {https://science.sciencemag.org/content/360/6394/1204.full.pdf},

journal = {Science}

}

DeepMind Blog: Neural scene representation and rendering

Financial Times Scientific American MIT Technology Review New Scientist Engadget Ars Technica Venture Beat Inverse Axios ABC News IEEE Spectrum TechCrunch Beebom BGR Hot Hardware TechXplore TechTimes EurekAlert! Daily Mail Two Minute Papers

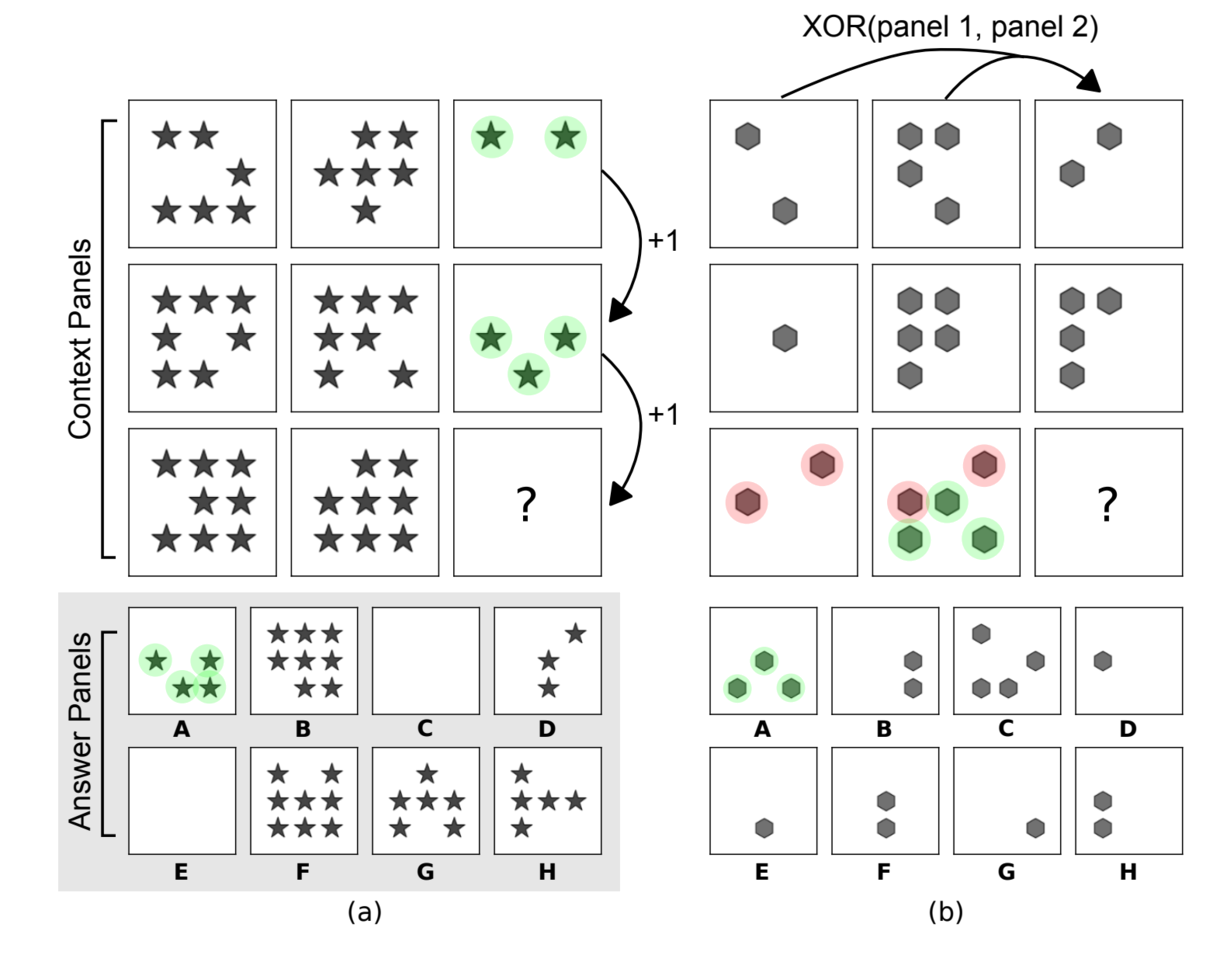

Measuring abstract reasoning in neural networks

David GT Barrett*, Felix Hill*, Adam Santoro*, Ari S Morcos, and Timothy Lillicrap

*equal contribution, alphabetical order

International Conference on Machine Learning (ICML), 2018. Selected for a long talk.

PDF arXiv PMLR GitHub Poster

title = {Measuring abstract reasoning in neural networks},

author = {Barrett, David and Hill, Felix and Santoro, Adam and Morcos, Ari and Lillicrap, Timothy},

pages = {511--520},

year = {2018},

editor = {Jennifer Dy and Andreas Krause},

volume = {80},

series = {Proceedings of Machine Learning Research},

address = {Stockholmsmässan, Stockholm Sweden},

month = {10--15 Jul},

publisher = {PMLR},

pdf = {http://proceedings.mlr.press/v80/barrett18a/barrett18a.pdf},

url = {http://proceedings.mlr.press/v80/barrett18a.html},

abstract = {Whether neural networks can learn abstract reasoning or whether they merely rely on superficial statistics is a topic of recent debate. Here, we propose a dataset and challenge designed to probe abstract reasoning, inspired by a well-known human IQ test. To succeed at this challenge, models must cope with various generalisation ’regimes’ in which the training data and test questions differ in clearly-defined ways. We show that popular models such as ResNets perform poorly, even when the training and test sets differ only minimally, and we present a novel architecture, with structure designed to encourage reasoning, that does significantly better. When we vary the way in which the test questions and training data differ, we find that our model is notably proficient at certain forms of generalisation, but notably weak at others. We further show that the model’s ability to generalise improves markedly if it is trained to predict symbolic explanations for its answers. Altogether, we introduce and explore ways to both measure and induce stronger abstract reasoning in neural networks. Our freely-available dataset should motivate further progress in this direction.}

}

DeepMind Blog: Measuring abstract reasoning in neural networks

VentureBeat New Scientist Futurism CIO Dive Two Minute Papers

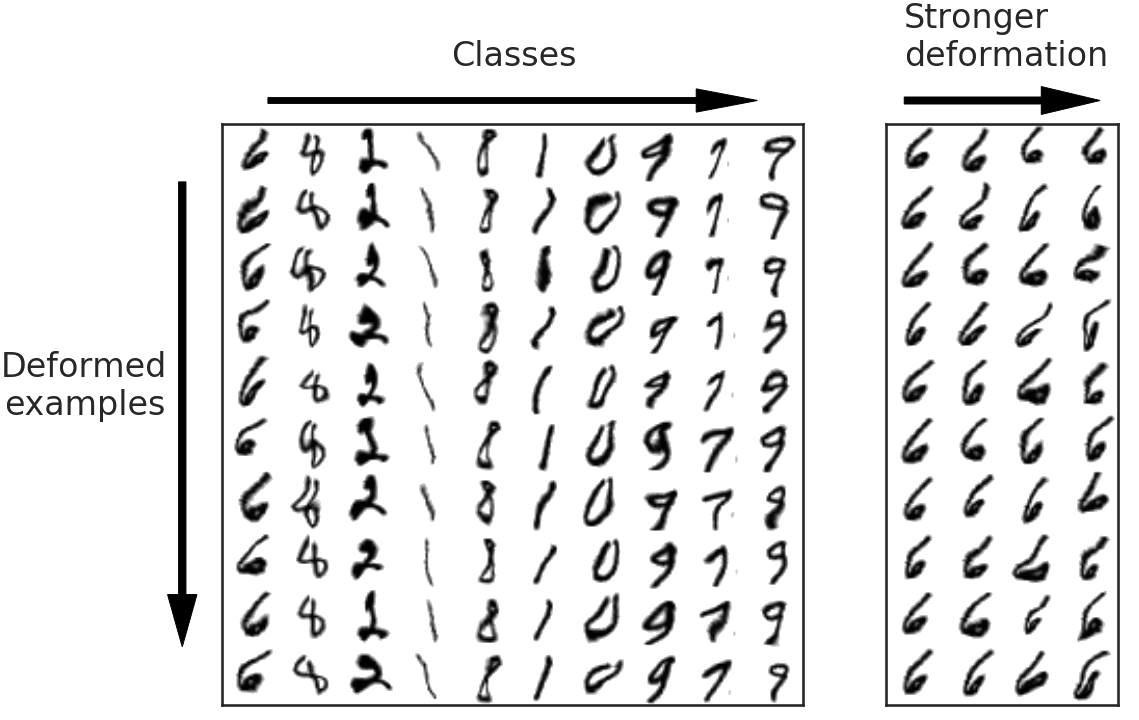

Pooling is neither necessary nor sufficient for appropriate deformation stability in CNNs

Avraham Ruderman, Neil C Rabinowitz, Ari S Morcos, and Daniel Zoran

arXiv, 2018.

PDF arXiv

title={Pooling is neither necessary nor sufficient for appropriate deformation stability in CNNs},

author={Avraham Ruderman and Neil C. Rabinowitz and Ari S. Morcos and Daniel Zoran},

year={2018},

eprint={1804.04438},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

On the importance of single directions for generalization

Ari S Morcos, David GT Barrett, Neil C Rabinowitz, and Matthew Botvinick

International Conference on Learning Representations (ICLR), 2018.

Also appeared in:

NIPS Workshop on Deep Learning: Bridging Theory and Practice, 2017.

PDF arXiv OpenReview Poster

@inproceedings{morcos_single_directions_iclr_2018,

title={On the importance of single directions for generalization},

author={Ari S. Morcos and David G.T. Barrett and Neil C. Rabinowitz and Matthew Botvinick},

booktitle={International Conference on Learning Representations},

year={2018},

url={https://openreview.net/forum?id=r1iuQjxCZ},

}

DeepMind Blog: Understanding deep learning through neuron deletion

BBC The Register Silicon Angle Analytics Vidhya

2016

History-dependent variability in population dynamics during evidence accumulation in cortex

Ari S Morcos and Christopher D Harvey

Nature Neuroscience vol. 19, no. 12, pp. 1672–1681, 2016. doi: 10.1038/nn.4403.

PDF Nature Neuroscience

title={History-dependent variability in population dynamics during evidence accumulation in cortex},

author={Morcos, Ari S and Harvey, Christopher D},

journal={Nature Neuroscience},

volume={19},

number={12},

pages={1672--1681},

year={2016},

publisher={Nature Publishing Group}

}

Harvard Medical School Press Release

News and Views: Many paths from state to state

Matthew T Kaufman and Anne K Churchland

Nature Neuroscience vol. 19, no. 12, pp. 1541–1542, 2016. doi: 10.1038/nn.4440.

PDF Nature Neuroscience

2015

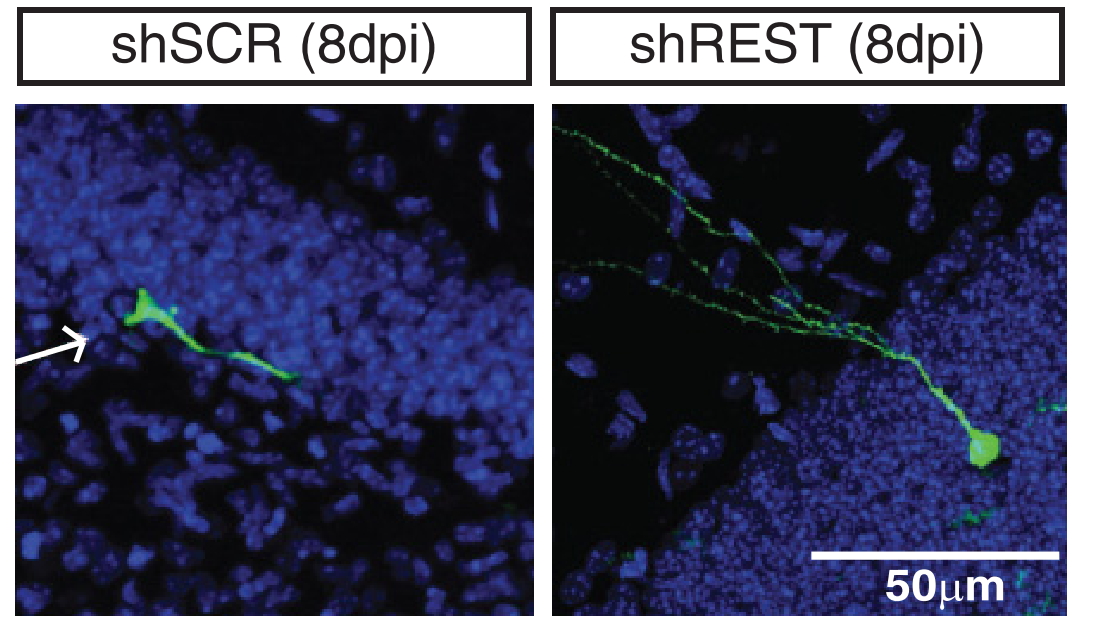

REST regulates non-cell-autonomous neuronal differentiation and maturation of neural progenitor cells via Secretogranin II

Hyung Joon Kim, Ahmet M Denli, Rebecca Wright, Tithi D Baul, Gregory D Clemenson, Ari S Morcos, Chunmei Zhao, Simon T Schafer, Fred H Gage, and Mohamedi N Kagalwala

Journal of Neuroscience, vol. 35, no. 44, pp. 14872–14884, 2015. doi: 10.1523/JNEUROSCI.4286-14.2015.

PDF Journal of Neuroscience

author = {Kim, Hyung Joon and Denli, Ahmet M. and Wright, Rebecca and Baul, Tithi D. and Clemenson, Gregory D. and Morcos, Ari S. and Zhao, Chunmei and Schafer, Simon T. and Gage, Fred H. and Kagalwala, Mohamedi N.},

title = {REST Regulates Non{\textendash}Cell-Autonomous Neuronal Differentiation and Maturation of Neural Progenitor Cells via Secretogranin II},

volume = {35},

number = {44},

pages = {14872--14884},

year = {2015},

doi = {10.1523/JNEUROSCI.4286-14.2015},

publisher = {Society for Neuroscience},

abstract = {RE-1 silencing transcription factor (REST), a master negative regulator of neuronal differentiation, controls neurogenesis by preventing the differentiation of neural stem cells. Here we focused on the role of REST in the early steps of differentiation and maturation of adult hippocampal progenitors (AHPs). REST knockdown promoted differentiation and affected the maturation of rat AHPs. Surprisingly, REST knockdown cells enhanced the differentiation of neighboring wild-type AHPs, suggesting that REST may play a non{\textendash}cell-autonomous role. Gene expression analysis identified Secretogranin II (Scg2) as the major secreted REST target responsible for the non{\textendash}cell-autonomous phenotype. Loss-of-function of Scg2 inhibited differentiation in vitro, and exogenous SCG2 partially rescued this phenotype. Knockdown of REST in neural progenitors in mice led to precocious maturation into neurons at the expense of mushroom spines in vivo. In summary, we found that, in addition to its cell-autonomous function, REST regulates differentiation and maturation of AHPs non{\textendash}cell-autonomously via SCG2. SIGNIFICANCE STATEMENT: Our results reveal that REST regulates differentiation and maturation of neural progenitor cells in vitro by orchestrating both cell-intrinsic and non{\textendash}cell-autonomous factors and that Scg2 is a major secretory target of REST with a differentiation-enhancing activity in a paracrine manner. In vivo, REST depletion causes accelerated differentiation of newborn neurons at the expense of spine defects, suggesting a potential role for REST in the timing of the maturation of granule neurons.},

issn = {0270-6474},

URL = {https://www.jneurosci.org/content/35/44/14872},

eprint = {https://www.jneurosci.org/content/35/44/14872.full.pdf},

journal = {Journal of Neuroscience}

}

Workshop publications, theses, and abstracts

The generalization-stability tradeoff in neural network pruning

Brian R Bartoldson, Ari S Morcos, Adrian Barbu, and Gordon Erlebacher

ICML Workshop on Understanding and Improving Generalization in Deep Learning, June, 2019. Long Beach, CA, USA.

NeurIPS Workshop on Science meets Engineering of Deep Learning, December, 2019. Vancouver, Canada.

PDF Understanding and Improving Generalization in Deep Learning Workshop Science Meets Engineering of Deep Learning Workshop

On the importance of single directions for generalization

Ari S Morcos, David GT Barrett, Neil C Rabinowitz, and Matthew Botvinick

NIPS Workshop on Deep Learning: Bridging Theory and Practice, December, 2017. Long Beach, CA, USA.

PDF Bridging Theory and Practice Workshop

Population dynamics in parietal cortex during evidence accumulation for decision-making

Ari S Morcos

PhD Thesis, Harvard University. April, 2016.

Abstract PDF

title={Population dynamics in parietal cortex during evidence accumulation for decision-making},

author={Morcos, Ari Simon},

year={2016}

}